Recently, a paper on compositional vision and knowledge question answering has been accepted by the journal IEEE TPAMI. IEEE TPAMI, short for IEEE Transactions on Pattern Analysis and Machine Intelligence, is an international journal on computer vision with an impact factor of 24.314, as announced in 2022. The paper information is as follows:

Difei Gao, Ruiping Wang, Shiguang Shan, and Xilin Chen. "CRIC: A VQA Dataset for Compositional Reasoning on Vision and Commonsense," IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), 2022. (Accepted on Sep 13th, 2022).

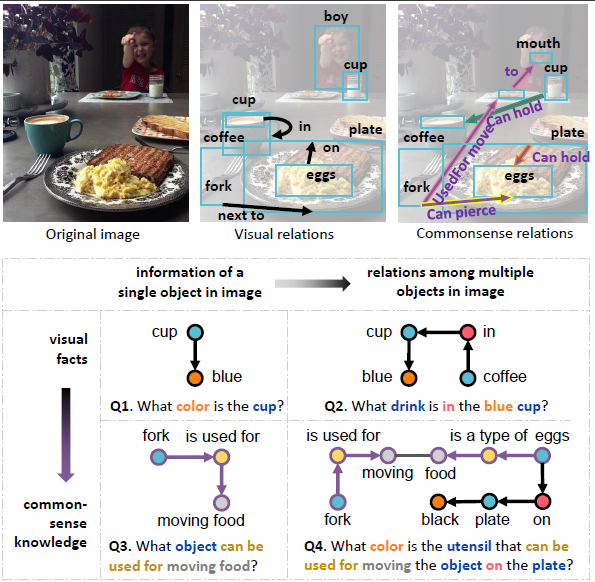

To evaluate visual question answering (VQA) systems in realistic scenarios, we need them not only to understand the visual relationships between objects in an image and the non-visual knowledge about a single object, but also to infer non-visual relationships between objects and to perform multi-hop reasoning. For example, to answer question Q4 in the figure, "what color is the utensil that can be used for moving the object on the plate?", a model needs to (1) identify the “egg”, “plate”, and “fork”; (2) infer that “an egg is on the plate” based on "what it sees"; (3) more importantly, infer the implicit non-visual commonsense relationship between objects, “a fork can move an egg”, based on "what it knows" about the world; and (4) answer the question by performing multi-hop reasoning over the preceding three steps. Existing VQA datasets mainly focus on abilities (1) and (2), which makes it hard to evaluate the more complex and practical abilities (3) and (4). Therefore, we propose a new VQA task requiring Compositional Reasoning on vIsion and Commonsense, named CRIC, construct a large-scale VQA dataset, and systematically analyze various representative VQA models.
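The four steps of the Q4 example can be made concrete with a small sketch. It is only an illustration, not the CRIC data format or any model from the paper: the dictionaries, the relation names (`on`, `is_a`, `can_move`), the helper functions, and the object colors are all assumptions made up for this toy example.

```python
# Toy scene graph: what the model "sees". Colors are invented for illustration.
scene_objects = {
    "obj1": {"name": "egg", "color": "white"},
    "obj2": {"name": "plate", "color": "blue"},
    "obj3": {"name": "fork", "color": "silver"},
}
scene_relations = [("obj1", "on", "obj2")]  # "an egg is on the plate"

# Toy knowledge graph: what the model "knows" (non-visual commonsense triples).
knowledge = {
    ("fork", "is_a", "utensil"),
    ("fork", "can_move", "egg"),
}


def objects_on(support_name):
    """Visual hop: ids of objects that lie on an object with the given name."""
    return [
        subj
        for subj, rel, obj in scene_relations
        if rel == "on" and scene_objects[obj]["name"] == support_name
    ]


def utensils_that_can_move(target_id):
    """Commonsense hop: ids of utensils in the image that can move the target."""
    target_name = scene_objects[target_id]["name"]
    return [
        oid
        for oid, obj in scene_objects.items()
        if (obj["name"], "is_a", "utensil") in knowledge
        and (obj["name"], "can_move", target_name) in knowledge
    ]


def answer_q4():
    """Chain the hops: object on the plate -> utensil that can move it -> color."""
    targets = objects_on("plate")
    utensils = [u for t in targets for u in utensils_that_can_move(t)]
    return scene_objects[utensils[0]]["color"] if utensils else None


print(answer_q4())  # prints "silver" for this toy data
```

The point of the sketch is that neither graph alone is enough: the scene graph has no `can_move` fact, and the knowledge graph does not know what is on the plate, so the answer requires composing both.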

The main contributions of this paper are summarized as follows:

1) We design a novel compositional vision and knowledge VQA task, and propose a semi-automatic dataset construction method that can utilize the scene graph and knowledge graph to generate questions.

2) Several representative VQA models are modified to enable joint reasoning on visual facts in images and external knowledge beyond images.

3) We conduct experiments with many representative VQA models on the CRIC dataset, analyze their limitations in joint reasoning between vision and knowledge, and suggest potential directions for model design.
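To give a rough idea of how a scene graph and a knowledge graph can be combined to generate questions, as mentioned in contribution 1), here is a minimal template-based sketch. It is not the authors' construction pipeline: the template, the triple format, and the function `generate_questions` are hypothetical and chosen only to illustrate the idea.

```python
# Hypothetical question template; the real dataset's templates may differ.
TEMPLATE = (
    "What color is the {category} that can be used for moving "
    "the object on the {support}?"
)


def generate_questions(object_names, scene_relations, knowledge):
    """Pair each visual 'on' relation with a matching commonsense fact.

    object_names: dict mapping object id -> name (toy scene graph nodes)
    scene_relations: list of (subject_id, relation, object_id) triples
    knowledge: list of (head, relation, tail) commonsense triples
    """
    # Map each concept to its category, e.g. "fork" -> "utensil".
    categories = {h: t for h, r, t in knowledge if r == "is_a"}
    present = set(object_names.values())
    questions = []
    for subj, rel, obj in scene_relations:
        if rel != "on":
            continue
        for head, k_rel, tail in knowledge:
            # Keep facts about tools visible in the image that act on the subject.
            usable = k_rel == "can_move" and tail == object_names[subj]
            if usable and head in present and head in categories:
                questions.append({
                    "question": TEMPLATE.format(
                        category=categories[head], support=object_names[obj]
                    ),
                    "answer_object": head,
                })
    return questions


names = {"obj1": "egg", "obj2": "plate", "obj3": "fork"}
relations = [("obj1", "on", "obj2")]
facts = [("fork", "is_a", "utensil"), ("fork", "can_move", "egg")]
for item in generate_questions(names, relations, facts):
    print(item["question"], "->", item["answer_object"])
```

In a semi-automatic setting, questions produced this way would presumably still need filtering or human checking, which this sketch does not attempt.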

Project Page: https://cricvqa.github.io/

Download: