Congratulations! Three papers from VIPL have been accepted to ACM MM 2022! ACM MM is the premier international conference on multimedia. ACM MM 2022 will be held in October 2022 in Lisbon, Portugal. The three papers are summarized below.

1. Zero-shot Video Classification with Appropriate Web and Task Knowledge Transfer (Junbao Zhuo, Yan Zhu, Shuhao Cui, Shuhui Wang, Bin Ma, Qingming Huang, Xiaoming Wei, Xiaolin Wei)

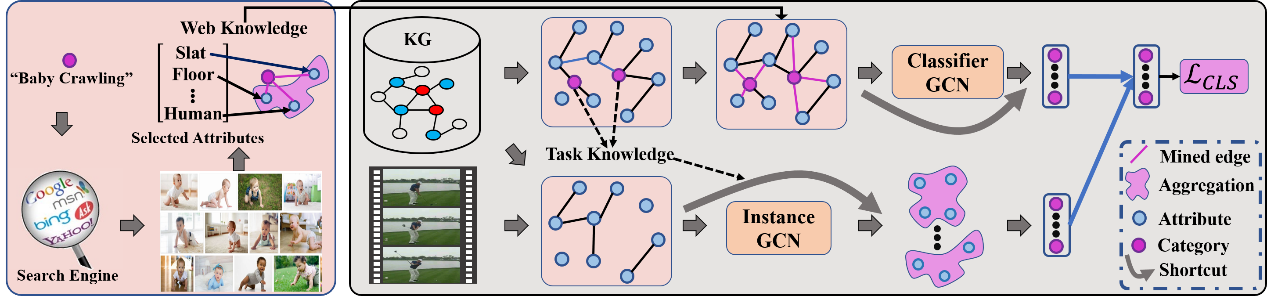

Zero-shot video classification (ZSVC), which aims to recognize video classes never seen during model training, has become a thriving research direction. ZSVC is achieved by building mappings between visual and semantic embeddings. Recently, ZSVC has been achieved by automatically mining the underlying objects in videos as attributes and incorporating external commonsense knowledge. However, the objects mined from seen categories cannot be generalized to unseen ones. Besides, the category-object relationships are usually extracted from commonsense knowledge or word embeddings, which are not consistent with the video modality. To tackle these issues, we propose to mine associated objects and category-object relationships for each category from retrieved web images. The associated objects of all categories are employed as generic attributes, and the mined category-object relationships narrow the modality inconsistency for better knowledge transfer. Another issue with existing ZSVC methods is that a model sufficiently trained on labeled seen categories may not generalize well to distinct unseen categories. To encourage a more reliable transfer, we propose Task Similarity aware Representation Learning (TSRL). In TSRL, the similarity between seen categories and unseen ones is estimated and used to regularize the model in an appropriate way. As shown in Fig. 1, we construct a model for ZSVC based on the constructed attributes, the mined category-object relationships, and the proposed TSRL. Experimental results on four public datasets, i.e., FCVID, UCF101, HMDB51 and Olympic Sports, show that our model performs favorably against state-of-the-art methods. Our code is publicly available at https://github.com/junbaoZHUO/TSRL.

Figure 1 The framework of our method
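For readers new to the task, the generic idea of classifying through a mapping between visual and semantic embeddings can be sketched as follows. This is a minimal illustration only, not the model from the paper: the linear map `W`, the toy class embeddings, and the function names are all assumptions made for the example, and neither the web-mined attributes nor TSRL is modeled here.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def project(W, x):
    """Apply a linear visual-to-semantic mapping (W is a list of rows)."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def zero_shot_predict(video_feature, W, class_embeddings):
    """Return the unseen class whose semantic embedding is closest
    to the projected video feature."""
    z = project(W, video_feature)
    return max(class_embeddings, key=lambda name: cosine(z, class_embeddings[name]))


# Hypothetical toy data: a 3-d visual feature mapped into a 2-d semantic space.
W = [[1.0, 0.0, 0.0],
     [0.0, 1.0, 1.0]]
unseen = {"surfing": [1.0, 0.1], "archery": [0.1, 1.0]}

print(zero_shot_predict([0.9, 0.1, 0.0], W, unseen))  # prints "surfing"
```

In practice the mapping would be learned on seen categories only, which is exactly where the generalization problem described in the abstract arises.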

2. Concept Propagation via Attentional Knowledge Graph Reasoning for Video-Text Retrieval (Sheng Fang, Shuhui Wang, Junbao Zhuo, Qingming Huang, Bin Ma, Xiaoming Wei, Xiaolin Wei)

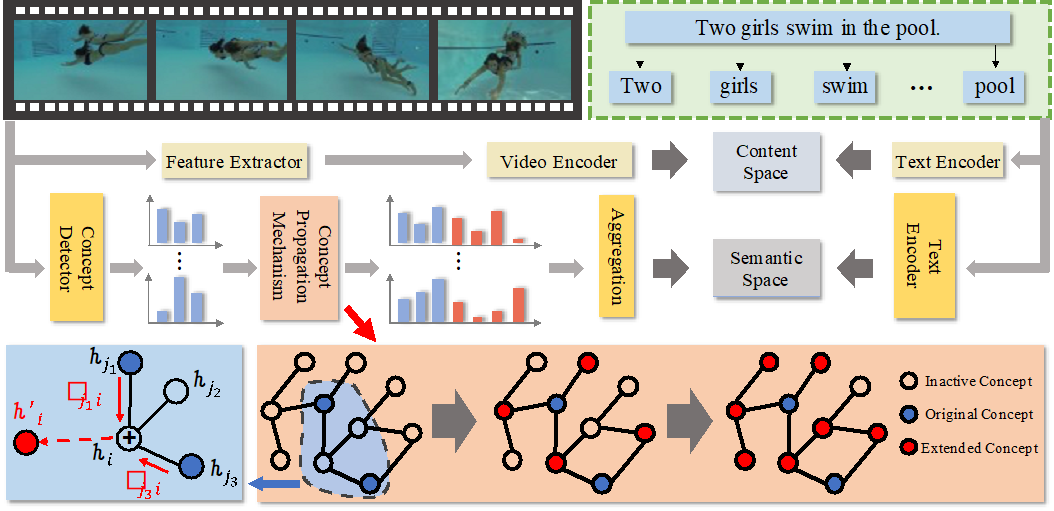

Due to the rapid growth of online video data, video-text retrieval techniques, which aim to find the most relevant video given a natural language caption and vice versa, are urgently needed. The major challenge of this task is how to identify the true fine-grained semantic correspondence between videos and texts using only document-level correspondence. To deal with this issue, we propose a simple yet effective two-stream framework that takes concept information into account and introduces a new branch of semantic-level matching. We further propose a concept propagation mechanism for mining the latent semantics in videos and obtaining enriched representations. Concept propagation is achieved by building a commonsense graph distilled from ConceptNet with concepts extracted from videos and captions. The original concepts of videos are detected by pretrained detectors and serve as the initial concept representations. By conducting attentional graph reasoning on the commonsense graph with the guidance of external knowledge, we can infer new concepts in a detector-free manner to further enrich the video representations. In addition, a propagated BCE loss is designed to supervise the concept propagation procedure. A common space is then learned for cross-modal matching. We conduct extensive experiments on various baseline models and several benchmark datasets. Promising experimental results demonstrate the effectiveness and generalization ability of our method.

Figure 2 Overview of the ACP framework
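To give a rough feel for what propagating concepts over a commonsense graph can look like, here is a toy, single-step sketch. It is not the ACP architecture: the attention form (softmax over embedding dot products), the `damping` factor, the `max` update rule, and the tiny hand-made graph are all assumptions chosen for illustration.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def propagate(scores, neighbors, embeddings, damping=0.5):
    """One attentional propagation step over a concept graph.

    scores:     concept -> detector confidence in [0, 1] (missing means 0)
    neighbors:  concept -> list of neighboring concepts in the graph
    embeddings: concept -> embedding vector used to compute attention
    """
    updated = {}
    for node, nbrs in neighbors.items():
        own = scores.get(node, 0.0)
        if not nbrs:
            updated[node] = own
            continue
        # Attention weights over neighbors, based on embedding similarity.
        attn = softmax([dot(embeddings[node], embeddings[n]) for n in nbrs])
        received = damping * sum(a * scores.get(n, 0.0) for a, n in zip(attn, nbrs))
        # Keep the detector score if it is stronger than the propagated one.
        updated[node] = max(own, received)
    return updated


# Hypothetical graph: only "dog" was detected; its neighbors are inferred.
neighbors = {"dog": ["leash", "park"], "leash": ["dog"], "park": ["dog"]}
embeddings = {"dog": [1.0, 0.0], "leash": [0.8, 0.2], "park": [0.3, 0.7]}
print(propagate({"dog": 0.9}, neighbors, embeddings))
```

Here "leash" and "park" receive non-zero scores even though no detector fired for them, which is the sense in which new concepts are obtained in a detector-free manner.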

3. Synthesizing Counterfactual Samples for Effective Image-Text Matching (Hao Wei, Shuhui Wang, Xinzhe Han, Zhe Xue, Bin Ma, Xiaoming Wei, Xiaolin Wei)

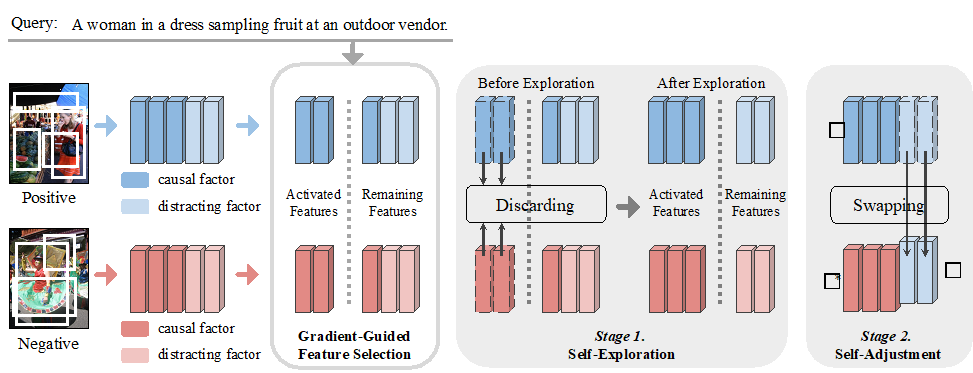

Image-text matching, which measures the visual-semantic similarity between an image and a text, is a fundamental building block for vision-language tasks. To discover fine-grained correspondences, recent works employ the hard negative mining strategy. However, truly informative negative samples are quite sparse in the training data and are hard to obtain from a randomly sampled mini-batch alone. Motivated by causal inference, we aim to overcome this shortcoming by carefully analyzing the analogy between hard negative mining and causal effect optimization. Further, we propose the Counterfactual Matching (CFM) framework for more effective image-text correspondence mining. CFM contains three major components, i.e., Gradient-Guided Feature Selection for automatic causal factor identification, Self-Exploration for causal factor completeness, and Self-Adjustment for counterfactual sample synthesis. Compared with traditional hard negative mining, our method largely alleviates over-fitting and effectively captures the fine-grained correlations between the image and text modalities. We evaluate CFM in combination with three state-of-the-art image-text matching architectures. Quantitative and qualitative experiments conducted on two publicly available datasets demonstrate its strong generality and effectiveness.

Figure 3 The framework of our method
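As background, the traditional in-batch hard negative mining that CFM is compared against is commonly written as a hinge loss over the hardest negative in each mini-batch. The sketch below shows that baseline idea only, not CFM itself; the function name, the margin value, and the toy similarity matrix are assumptions for illustration.

```python
def hardest_negative_loss(sim, margin=0.2):
    """Hinge loss using the hardest in-batch negative in both directions.

    sim[i][j] is the similarity between image i and caption j;
    the diagonal holds the matched (positive) pairs.
    Requires a batch of at least two pairs.
    """
    n = len(sim)
    total = 0.0
    for i in range(n):
        pos = sim[i][i]
        # Hardest negative caption for image i.
        hard_cap = max(sim[i][j] for j in range(n) if j != i)
        # Hardest negative image for caption i.
        hard_img = max(sim[j][i] for j in range(n) if j != i)
        total += max(0.0, margin + hard_cap - pos)
        total += max(0.0, margin + hard_img - pos)
    return total / n


# Hypothetical 3x3 batch of image-caption similarities.
sim = [[0.9, 0.8, 0.1],
       [0.2, 0.7, 0.3],
       [0.1, 0.4, 0.6]]
print(hardest_negative_loss(sim))
```

Because the hardest negative is chosen only among the other items that happen to be in the batch, a randomly sampled batch may contain no truly informative negative, which is the limitation the abstract points out.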
