Congratulations! Six papers from VIPL have been accepted to ECCV 2022! ECCV (the European Conference on Computer Vision) is a top conference in computer vision and image analysis. ECCV 2022 will be held in October 2022 in Tel Aviv, Israel. The six papers are summarized as follows:

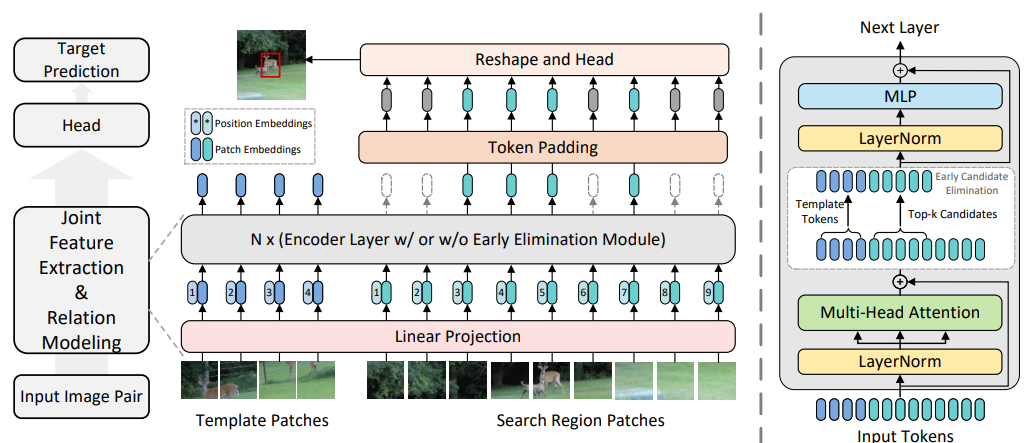

1. Joint Feature Learning and Relation Modeling for Tracking: A One-Stream Framework (Botao Ye, Hong Chang, Bingpeng Ma, and Shiguang Shan)

The currently popular two-stream tracking framework extracts the template and search region features separately and then performs relation modeling, so the extracted features lack awareness of the target and have limited target-background discriminability. To tackle this issue, we propose a novel one-stream tracking (OSTrack) framework that unifies feature learning and relation modeling by bridging the template-search image pairs with bidirectional information flows. In this way, discriminative target-oriented features can be dynamically extracted by mutual guidance. Since no extra heavy relation modeling module is needed and the implementation is highly parallelized, the proposed tracker runs at a fast speed. To further improve the inference efficiency, an in-network candidate early elimination module is proposed based on the strong similarity prior calculated in the one-stream framework. OSTrack achieves state-of-the-art performance on multiple benchmarks, maintains a good performance-speed trade-off, and shows faster convergence.
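To make the idea of candidate early elimination more concrete, here is a minimal sketch in plain Python. It is not the OSTrack implementation: the scoring rule (softmax attention from each template token over the search tokens, averaged over template tokens), the function name `eliminate_candidates`, and the `keep_ratio` parameter are all assumptions chosen for illustration. The abstract only states that elimination relies on the similarity prior computed inside the one-stream network.

```python
import math

def softmax(xs):
    # Numerically stable softmax over a list of floats.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def eliminate_candidates(template_tokens, search_tokens, keep_ratio=0.5):
    """Keep the search tokens that are most similar to the template.

    Hypothetical helper: scores each search token by the mean attention
    weight it receives from the template tokens, then keeps the top
    `keep_ratio` fraction. Returns (kept_indices, kept_tokens), with
    indices in their original order.
    """
    scale = 1.0 / math.sqrt(len(search_tokens[0]))
    scores = [0.0] * len(search_tokens)
    for t in template_tokens:
        # Attention of one template token over all search tokens.
        weights = softmax([dot(t, s) * scale for s in search_tokens])
        for i, w in enumerate(weights):
            scores[i] += w / len(template_tokens)
    n_keep = max(1, math.ceil(keep_ratio * len(search_tokens)))
    ranked = sorted(range(len(search_tokens)),
                    key=lambda i: scores[i], reverse=True)
    kept = sorted(ranked[:n_keep])
    return kept, [search_tokens[i] for i in kept]

template = [[1.0, 0.0]]
search = [[5.0, 0.0], [0.0, 5.0], [4.0, 0.0], [-5.0, 0.0]]
kept_indices, kept_tokens = eliminate_candidates(template, search, keep_ratio=0.5)
```

In this toy example the two search tokens that point in the same direction as the template token are retained, and later layers would only process those, which is where the inference savings would come from.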

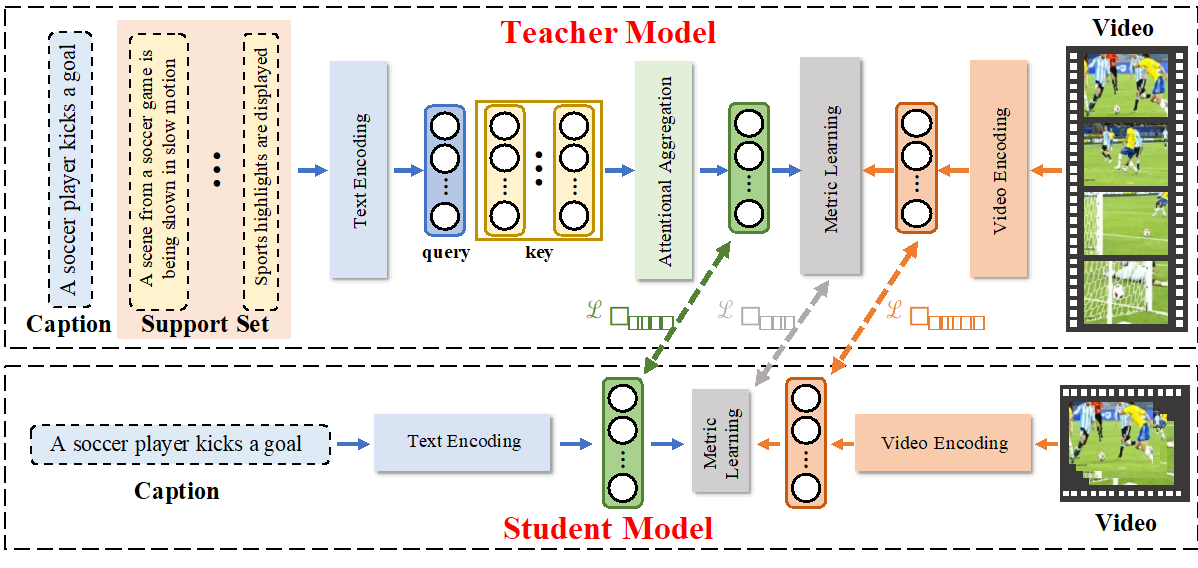

2. Learning Linguistic Association Towards Efficient Text-Video Retrieval (Sheng Fang, Shuhui Wang, Junbao Zhuo, Xinzhe Han, Qingming Huang)

Text-video retrieval has attracted growing attention recently. A dominant approach is to learn a common space for aligning the two modalities. However, videos generally deliver richer content than text, and captions usually miss certain events or details in the video. This information imbalance between the two modalities makes it difficult to align their representations. We propose a general framework, LINguistic Association (LINAS), which utilizes the complementarity between captions corresponding to the same video. Concretely, we first train a teacher model that takes extra relevant captions as inputs and can aggregate language semantics to obtain more comprehensive text representations. Since the additional captions are inaccessible during inference, Knowledge Distillation is employed to train a student model with a single caption as input. We further propose an Adaptive Distillation strategy, which allows the student model to adaptively learn the knowledge from the teacher model. This strategy also suppresses the spurious relations introduced during the linguistic association. Extensive experiments demonstrate the effectiveness and efficiency of LINAS with various baseline architectures on benchmark datasets.
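The teacher-student setup can be illustrated with a generic knowledge distillation loss. The sketch below is an assumption-laden illustration, not the LINAS objective: it uses the standard KL divergence between temperature-softened similarity distributions, and the names `distillation_loss`, `teacher_sims`, and `student_sims` are hypothetical. The paper's Adaptive Distillation strategy is not reproduced here.

```python
import math

def softmax(xs, temperature=1.0):
    # Temperature-scaled, numerically stable softmax.
    scaled = [x / temperature for x in xs]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def kl_divergence(p, q, eps=1e-12):
    # KL(p || q) for two discrete distributions of equal length.
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))

def distillation_loss(teacher_sims, student_sims, temperature=1.0):
    """Generic distillation loss over text-to-video similarity scores.

    teacher_sims: similarities of one query to each candidate video,
                  computed by a teacher that saw extra captions.
    student_sims: the same similarities from a single-caption student.
    """
    p_teacher = softmax(teacher_sims, temperature)
    p_student = softmax(student_sims, temperature)
    return kl_divergence(p_teacher, p_student)

loss_same = distillation_loss([2.0, 0.5, 0.1], [2.0, 0.5, 0.1])
loss_diff = distillation_loss([2.0, 0.5, 0.1], [0.1, 0.5, 2.0])
```

The loss is zero when the student ranks the candidate videos exactly as the teacher does and grows as the two similarity distributions diverge, so minimizing it pushes the single-caption student toward the multi-caption teacher's behavior.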

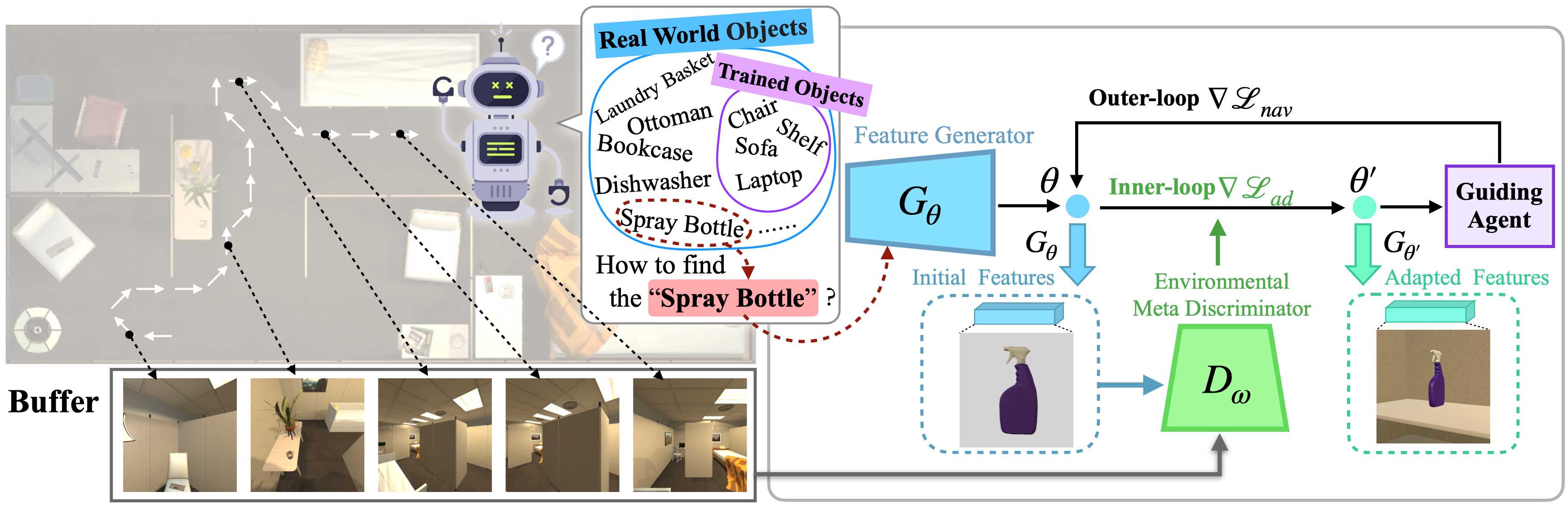

3. Generative Meta-Adversarial Network for Unseen Object Navigation (Sixian Zhang, Weijie Li, Xinhang Song, Yubing Bai, Shuqiang Jiang)

Object navigation is the task of letting an agent navigate to a target object. Prevailing works attempt to expand navigation ability in new environments and achieve reasonable performance on the seen object categories that have been observed in training environments. However, this setting is somewhat limited in real-world scenarios, where navigating to unseen object categories is generally unavoidable. In this paper, we focus on the problem of navigating to unseen objects in new environments based only on limited training knowledge. As in common ObjectNav tasks, our agent still takes the egocentric observation and target object category as input and does not require any extra inputs. Our solution is to let the agent "imagine" the unseen object by synthesizing features of the target object. We propose a generative meta-adversarial network (GMAN), which is mainly composed of a feature generator and an environmental meta discriminator, aiming to generate features for unseen objects and new environments in two steps. The former generates the initial features of the unseen objects based on the semantic embedding of the object category. The latter enables the generator to further learn the background characteristics of the new environment, progressively adapting the generated features to approximate the real features of the target object. The adapted features serve as a more specific representation of the target to guide the agent. Moreover, to quickly update the generator with a few observations, the entire adversarial framework is learned in a gradient-based meta-learning manner. The experimental results on the AI2THOR and RoboTHOR simulators demonstrate the effectiveness of the proposed method in navigating to unseen object categories.

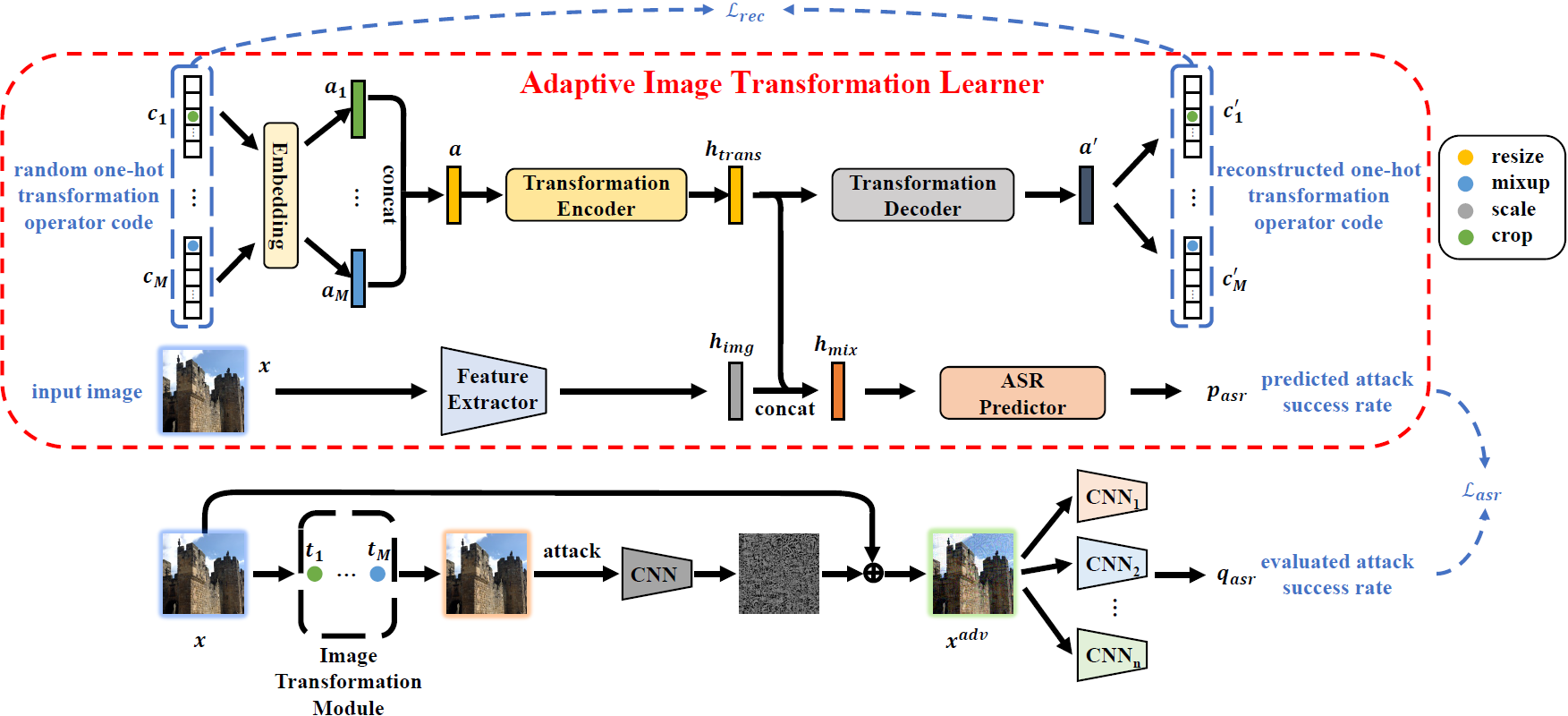

4. Adaptive Image Transformations for Transfer-based Adversarial Attack (Zheng Yuan, Jie Zhang, Shiguang Shan)

Adversarial attacks provide a good way to study the robustness of deep learning models. One category of transfer-based black-box attack methods utilizes several image transformation operations to improve the transferability of adversarial examples. This is effective, but fails to take the specific characteristics of the input image into consideration. In this work, we propose a novel architecture, called Adaptive Image Transformation Learner (AITL), which incorporates different image transformation operations into a unified framework to further improve the transferability of adversarial examples. Unlike the fixed combinational transformations used in existing works, our elaborately designed transformation learner adaptively selects the most effective combination of image transformations specific to the input image. Extensive experiments on ImageNet demonstrate that our method significantly improves the attack success rates on both normally trained models and defense models under various settings.

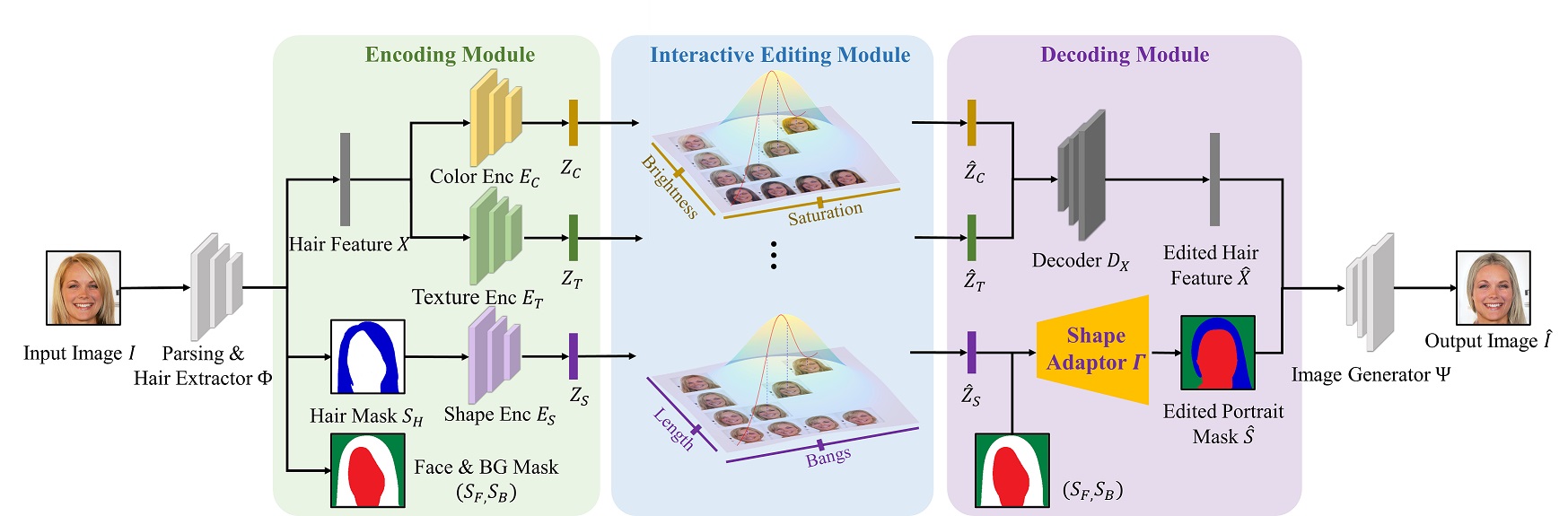

5. GAN with Multivariate Disentangling for Controllable Hair Editing (Xuyang Guo, Meina Kan, Tianle Chen, Shiguang Shan)

Hair editing is an essential but challenging task in portrait editing, considering the complex geometry and material of hair. Existing methods have achieved promising results by editing through a reference photo, user-painted mask, or guiding strokes. However, when a user provides no reference photo or has difficulty painting a desirable mask, these works fail to edit. Going a step further, we propose an efficiently controllable method that provides a set of sliding bars for continuous and fine hair editing. Meanwhile, it also naturally supports discrete editing through a reference photo and user-painted mask. Specifically, we propose a generative adversarial network with a multivariate Gaussian disentangling module. First, an encoder disentangles the hair’s major attributes (color, texture, and shape) into separate latent representations. The latent representation of each attribute is modeled as a standard multivariate Gaussian distribution, so that each dimension of an attribute can be changed continuously and finely. Benefiting from the Gaussian distribution, any manual editing, including sliding a bar, providing a reference photo, and painting a mask, can be easily made, which is flexible and user-friendly. Finally, with the changed latent representations, the decoder outputs a portrait with the edited hair. Experiments show that our method can edit each dimension of each attribute continuously and separately.

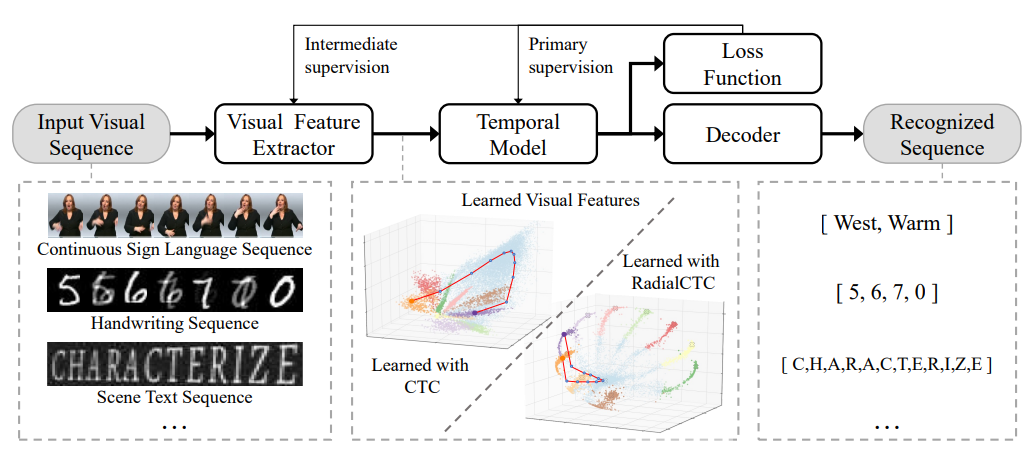

6. Deep Radial Embedding for Visual Sequence Learning (Yuecong Min, Peiqi Jiao, Yannan Li, Xiaotao Wang, Lei Lei, Xiujuan Chai, Xilin Chen)

Connectionist Temporal Classification (CTC) is a popular objective function in sequence recognition, which provides supervision for unsegmented sequence data by iteratively aligning a sequence with its corresponding labeling. The blank class of CTC plays a crucial role in the alignment process and is often considered responsible for the peaky behavior of CTC. In this study, we propose an objective function named RadialCTC that constrains sequence features on a hypersphere while retaining the iterative alignment mechanism of CTC. The learned features of each non-blank class are distributed on a radial arc from the center of the blank class, which provides a clear geometric interpretation and makes the alignment process more efficient. In addition, RadialCTC can control the peaky behavior by simply modifying the logit of the blank class. Experimental results on recognition and localization demonstrate the effectiveness of RadialCTC on two sequence recognition applications.
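For readers unfamiliar with CTC, the sketch below shows the standard forward recursion that sums the probability of every frame-level alignment of a label sequence, with the blank class interleaved between labels. This is plain CTC only, written as an illustration; it does not implement RadialCTC or its hypersphere constraint, and the function name `ctc_likelihood` is hypothetical.

```python
def ctc_likelihood(probs, labels, blank=0):
    """Probability of `labels` under standard CTC.

    probs[t][k] is the probability of class k at frame t.
    labels is the target sequence without blanks.
    """
    # Extended sequence: blank, l1, blank, l2, ..., blank.
    ext = [blank]
    for label in labels:
        ext += [label, blank]
    size = len(ext)

    # Initialisation: a path may start with a blank or the first label.
    alpha = [0.0] * size
    alpha[0] = probs[0][ext[0]]
    if size > 1:
        alpha[1] = probs[0][ext[1]]

    for t in range(1, len(probs)):
        new_alpha = [0.0] * size
        for s in range(size):
            total = alpha[s]                      # stay on the same symbol
            if s >= 1:
                total += alpha[s - 1]             # advance by one position
            if s >= 2 and ext[s] != blank and ext[s] != ext[s - 2]:
                total += alpha[s - 2]             # skip the blank in between
            new_alpha[s] = total * probs[t][ext[s]]
        alpha = new_alpha

    # Valid paths end on the last label or the trailing blank.
    return alpha[-1] + (alpha[-2] if size > 1 else 0.0)

# Two frames, two classes (0 = blank, 1 = "a"), uniform predictions.
uniform = [[0.5, 0.5], [0.5, 0.5]]
p_a = ctc_likelihood(uniform, [1])
```

With two frames and uniform predictions, the label sequence "a" is reached by three alignments ("a" then blank, blank then "a", and "a" repeated), each with probability 0.25. Because the blank class takes part in almost every alignment, changing its score shifts how much probability mass blank frames absorb, which is the behavior the abstract refers to as peaky.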
