Congratulations! VIPL's research paper “CMOS-GAN: Semi-supervised Generative Adversarial Model for Cross-Modality Face Image Synthesis” was recently accepted by IEEE TIP (IEEE Transactions on Image Processing), an international journal on image processing and computer vision with a current impact factor of 11.041.

Shikang Yu, Hu Han, Shiguang Shan, and Xilin Chen. CMOS-GAN: Semi-supervised Generative Adversarial Model for Cross-Modality Face Image Synthesis, IEEE Transactions on Image Processing, 2022. (accepted) [Code]

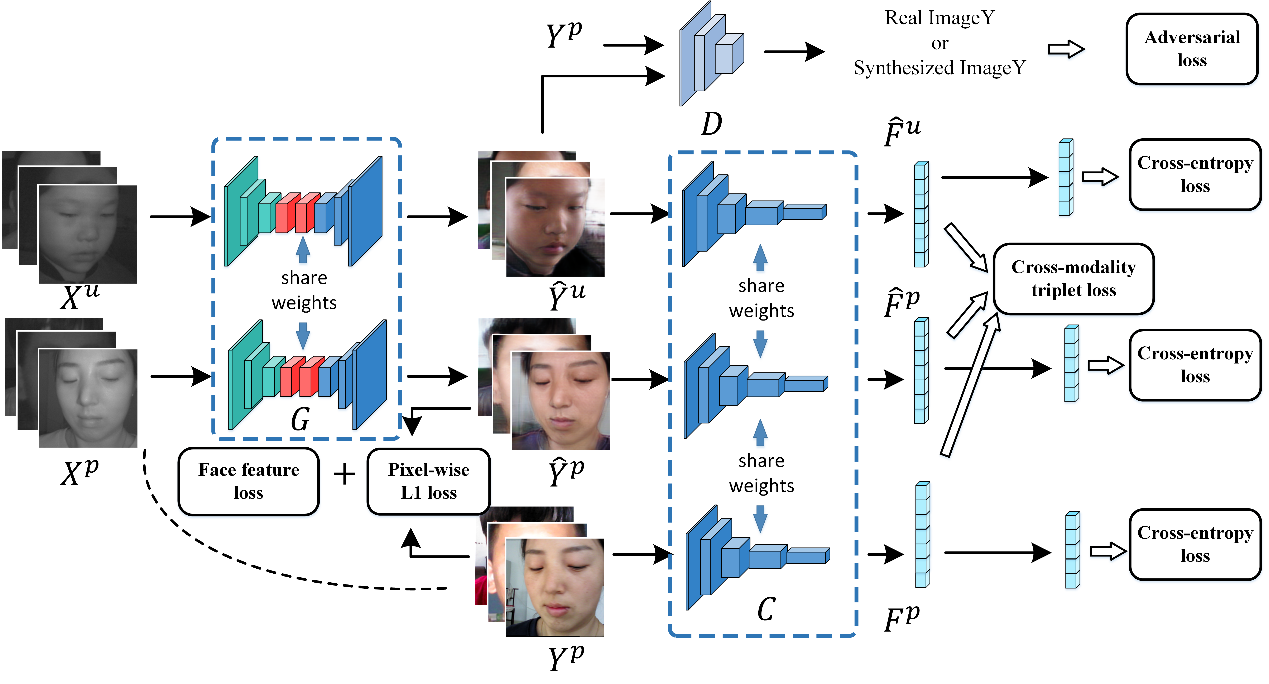

Cross-modality face image synthesis (e.g., sketch-to-photo, near-infrared-to-visible, and visible-to-depth) has a wide range of applications in face recognition, facial animation, and film and television entertainment. Existing cross-modality face image synthesis methods usually rely on paired multi-modality data for fully supervised learning. In practical applications, however, paired multi-modality images are usually limited in scale, while unpaired single-modality face images are abundant. To address this, we propose a semi-supervised cross-modality face image synthesis method, CMOS-GAN, which uses both paired multi-modality images and unpaired single-modality images to achieve more accurate and robust cross-modality face image synthesis. As shown in Figure 1, CMOS-GAN builds on the conventional encoder-decoder generator architecture and combines a pixel-level loss, an adversarial loss, a classification loss, and a face feature loss; a two-step training procedure then mines and exploits the useful information in both the paired multi-modality images and the unpaired single-modality images. For cross-modality face recognition, a common application of cross-modality face image synthesis, we also propose a revised triplet loss so that the synthesized face images better preserve the identity information of the original images. We verify the effectiveness of the method on 3 cross-modality face synthesis tasks using 5 public datasets (VIPL-MumoFace-3K, RealSenseII RGBD, BUAA Lock3DFace, CUFS, and CUFSF), and compare it with state-of-the-art supervised and semi-supervised cross-modality face synthesis methods.
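To make the combination of loss terms more concrete, here is a minimal pure-Python sketch under stated assumptions. It is not the authors' implementation: the post does not describe how the triplet loss is revised, so the code shows only the *standard* triplet margin loss that such a revision would start from. The choice of an L1 pixel loss, the `margin` value, and the helper names (`pixel_l1`, `triplet_loss`, `generator_objective`) and weights are all hypothetical.

```python
import math
from typing import Dict, Sequence


def pixel_l1(fake: Sequence[float], real: Sequence[float]) -> float:
    """Mean absolute error over flattened pixels (assumed L1 pixel-level loss)."""
    return sum(abs(f - r) for f, r in zip(fake, real)) / len(fake)


def l2_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two face embedding vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def triplet_loss(anchor: Sequence[float], positive: Sequence[float],
                 negative: Sequence[float], margin: float = 0.2) -> float:
    """Standard triplet margin loss: max(0, d(a, p) - d(a, n) + margin).

    Pulls the positive (same identity) toward the anchor and pushes the
    negative (different identity) at least `margin` further away.
    This is the unmodified textbook form, not the paper's revised loss.
    """
    return max(0.0, l2_distance(anchor, positive)
               - l2_distance(anchor, negative) + margin)


def generator_objective(losses: Dict[str, float],
                        weights: Dict[str, float]) -> float:
    """Weighted sum of named loss terms; missing weights default to 1.0."""
    return sum(weights.get(name, 1.0) * value for name, value in losses.items())


if __name__ == "__main__":
    # Hypothetical embeddings: anchor = synthesized face,
    # positive = real face of the same identity, negative = another identity.
    anchor, positive, negative = [0.0, 0.0], [0.3, 0.4], [0.0, 0.6]
    losses = {
        "pixel": pixel_l1([0.2, 0.5, 0.9], [0.1, 0.5, 1.0]),
        "triplet": triplet_loss(anchor, positive, negative),
    }
    # Hypothetical weights, chosen only for illustration.
    print(generator_objective(losses, {"pixel": 10.0, "triplet": 1.0}))
```

In this setup the negative is only slightly farther from the anchor than the positive, so the triplet term is positive (about 0.1) and still contributes a gradient; once the identity gap exceeds the margin, the term drops to zero. Adversarial, classification, and face feature terms would be added to the same dictionary in the same way.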

In addition, we construct a large-scale multi-modality face dataset, VIPL-MumoFace-3K, which contains more than 1.4 million trimodal (RGB-D-NIR) face image pairs from more than 3,000 subjects. This dataset is far larger than the largest existing public dataset in the field and can be used for research on cross-modality face synthesis and recognition.

Fig. 1 The architecture of the proposed semi-supervised cross-modality face synthesis method (CMOS-GAN)
