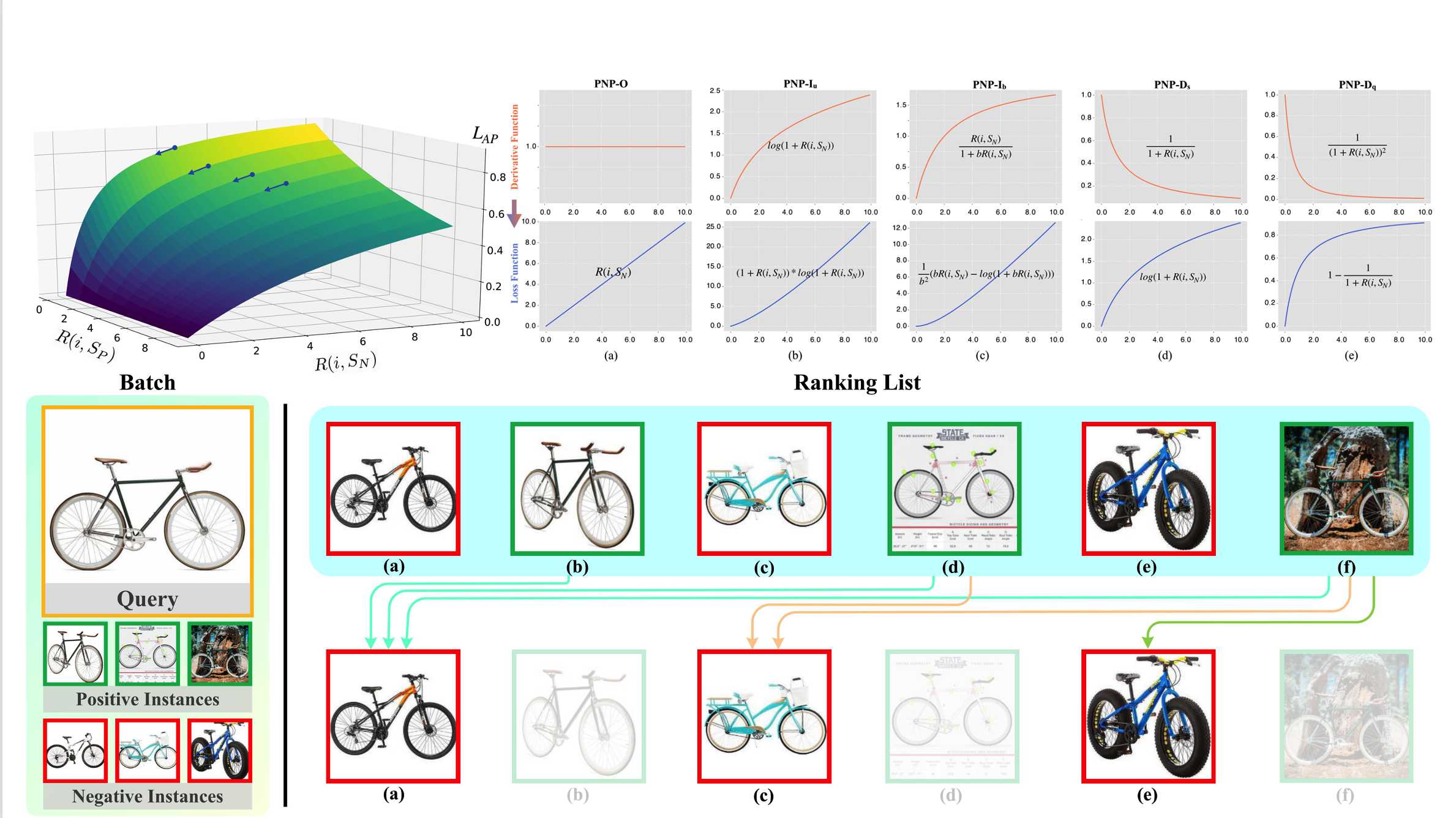

Abstract

Optimizing approximations of Average Precision (AP) has been widely studied for image retrieval. Limited by the definition of AP, such methods must consider both the negative and the positive instances ranked before each positive instance. However, we claim that penalizing only the negative instances ranked before positive ones is enough, because the loss comes only from these negative instances. To this end, we propose a novel loss, namely Penalizing Negative instances before Positive ones (PNP), which directly minimizes the number of negative instances ranked before each positive one. In addition, AP-based methods adopt a fixed and sub-optimal gradient assignment strategy. We therefore systematically investigate different gradient assignment solutions by constructing derivative functions of the loss, resulting in PNP-I, with increasing derivative functions, and PNP-D, with decreasing ones. PNP-I focuses more on hard positive instances by assigning larger gradients to them, and tries to pull all relevant instances closer together. In contrast, PNP-D pays less attention to such instances and corrects them slowly. For most real-world data, one class usually contains several local clusters. PNP-I blindly gathers these clusters, while PNP-D preserves the original data distribution. Therefore, PNP-D is superior. Experiments on three standard retrieval datasets are consistent with this analysis, and extensive evaluations demonstrate that PNP-D achieves state-of-the-art performance.
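
The idea of counting negatives ranked before each positive, and then reshaping the gradient, can be illustrated with a minimal sketch. This is not the authors' implementation. The sigmoid relaxation, the temperature `tau`, the function names, and the two transforms (`log(1 + R)` for a decreasing derivative, `R^2 / 2` for an increasing one) are assumptions chosen only to show the mechanism; the abstract does not specify the exact functional forms of PNP-I and PNP-D.

```python
import math


def sigmoid(x, tau=0.01):
    """Numerically stable sigmoid of x / tau (tau is an assumed temperature)."""
    z = x / tau
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def negatives_before(scores, labels, tau=0.01):
    """Smooth count R of negatives that score higher than each positive.

    scores: similarity of each gallery item to the query (higher = closer).
    labels: 1 for a positive (relevant) item, 0 for a negative one.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    return [sum(sigmoid(n - p, tau) for n in neg) for p in pos]


def pnp_style_loss(scores, labels, variant="plain", tau=0.01):
    """Average a transform f(R) over the positives.

    'plain'      : f(R) = R            (constant derivative)
    'decreasing' : f(R) = log(1 + R)   (derivative shrinks as R grows)
    'increasing' : f(R) = R^2 / 2      (derivative grows as R grows)
    The last two are illustrative stand-ins, not the paper's definitions.
    """
    counts = negatives_before(scores, labels, tau)
    if variant == "plain":
        per_pos = counts
    elif variant == "decreasing":
        per_pos = [math.log1p(r) for r in counts]
    elif variant == "increasing":
        per_pos = [0.5 * r * r for r in counts]
    else:
        raise ValueError(f"unknown variant: {variant}")
    return sum(per_pos) / len(per_pos)


# Toy ranking: the second positive (0.3) has one negative (0.8) ahead of it.
scores = [0.9, 0.8, 0.3, 0.1]
labels = [1, 0, 1, 0]
counts = negatives_before(scores, labels)
```

In this toy ranking the smooth counts are close to 0 for the top-ranked positive and close to 1 for the other. A transform with a decreasing derivative gives hard positives (large `R`) a smaller gradient per negative, which matches the behaviour the abstract attributes to PNP-D, while an increasing derivative does the opposite.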

Zhuo Li, Weiqing Min, Jiajun Song, Yaohui Zhu, Liping Kang, Xiaoming Wei, Xiaolin Wei, Shuqiang Jiang, "Rethinking the Optimization of Average Precision: Only Penalizing Negative Instances before Positive Ones is Enough", 36th AAAI Conference on Artificial Intelligence (AAAI 2022), Vancouver, BC, Canada, Feb.22 - Mar.1, 2022.
