Abstract

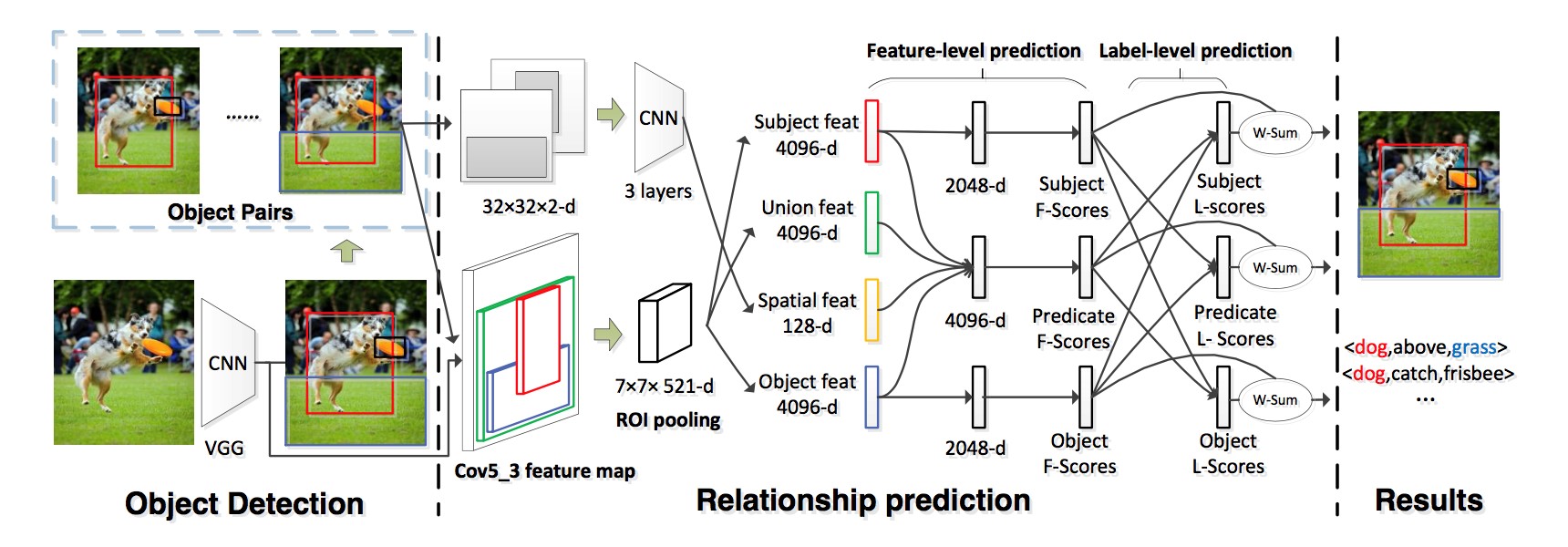

In computer vision and artificial intelligence, learning the relationships between objects is an important step toward a deeper understanding of images. Most recent works detect visual relationships by learning objects and predicates separately at the feature level, but the dependencies between objects and predicates have not been fully considered. In this paper, we introduce deep structured learning for visual relationship detection. Specifically, we propose a deep structured model that learns relationships using both feature-level prediction and label-level prediction, improving on the learning ability of feature-level prediction alone. The feature-level prediction learns relationships from discriminative features, and the label-level prediction learns relationships by capturing dependencies between objects and predicates based on the relationships learnt at the feature level. Additionally, we use the structured SVM (SSVM) loss function as our optimization goal and decompose this goal into subject, predicate, and object optimizations, which are simpler and more independent. Our experiments on the Visual Relationship Detection (VRD) dataset and the large-scale Visual Genome (VG) dataset validate the effectiveness of our method, which outperforms state-of-the-art methods.
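As a rough illustration of the decomposition idea, the sketch below sums three independent margin-based hinge terms, one each for the subject, predicate, and object. The abstract does not give the exact loss, so this is only an assumption: a generic multiclass hinge with a fixed margin stands in for the SSVM objective, and the function names `margin_hinge` and `decomposed_ssvm_loss` are hypothetical.

```python
from typing import Sequence, Tuple


def margin_hinge(scores: Sequence[float], gold: int, margin: float = 1.0) -> float:
    """Generic multiclass hinge (an assumption, not the paper's exact loss).

    Returns max(0, max over y != gold of (margin + scores[y]) - scores[gold]).
    Requires at least two classes.
    """
    worst = max(margin + s for y, s in enumerate(scores) if y != gold)
    return max(0.0, worst - scores[gold])


def decomposed_ssvm_loss(
    subj_scores: Sequence[float],
    pred_scores: Sequence[float],
    obj_scores: Sequence[float],
    gold_triple: Tuple[int, int, int],
    margin: float = 1.0,
) -> float:
    """Sum of three independent hinge terms for subject, predicate, and object."""
    s, p, o = gold_triple
    return (
        margin_hinge(subj_scores, s, margin)
        + margin_hinge(pred_scores, p, margin)
        + margin_hinge(obj_scores, o, margin)
    )


if __name__ == "__main__":
    # Toy label scores; the gold triple indexes (subject, predicate, object).
    loss = decomposed_ssvm_loss(
        subj_scores=[2.0, 0.5, 0.1],
        pred_scores=[0.2, 0.9],
        obj_scores=[0.3, 1.0, 0.8],
        gold_triple=(0, 0, 1),
    )
    print(f"decomposed loss: {loss:.3f}")
```

Because each term depends on only one component's scores, a mistake in the predicate does not change the subject or object terms, which is one way to read the "simpler and more independent" optimizations the abstract describes.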

Yaohui Zhu, Shuqiang Jiang. Deep Structured Learning for Visual Relationship Detection. Thirty-Second AAAI Conference on Artificial Intelligence (AAAI 2018), February 2-7, 2018, New Orleans, Louisiana, USA.
