Abstract

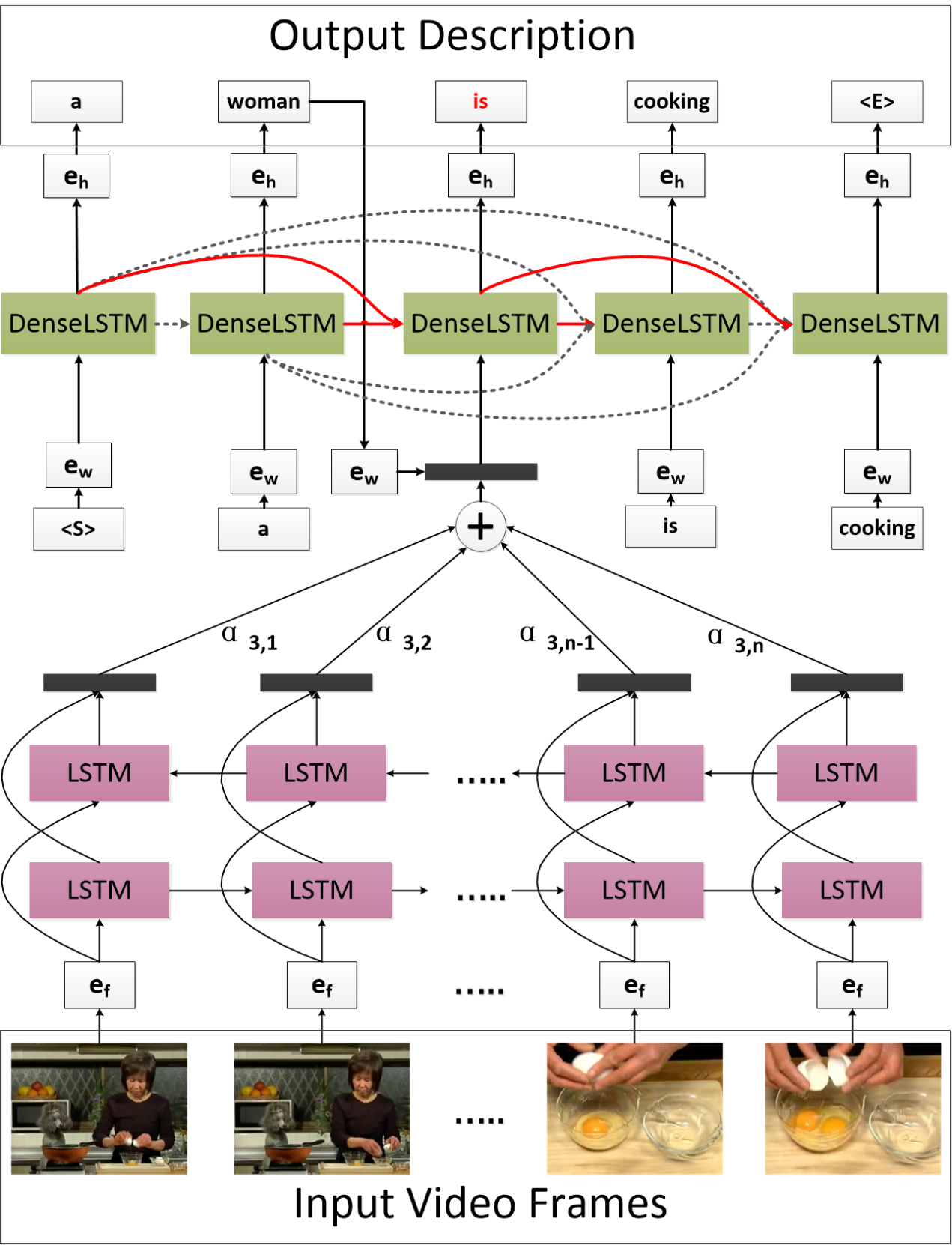

Recurrent Neural Networks (RNNs), especially Long Short-Term Memory (LSTM) networks, have been widely used for video captioning because they can model the temporal dependencies within both the video frames and the corresponding descriptions. However, as a sequence grows longer, these dependencies become much harder to handle. Moreover, in a traditional LSTM, previously generated hidden states other than the last one do not contribute directly to predicting the current word. As a result, the predicted word may depend heavily on the last few states rather than on the overall context. To better capture long-range dependencies and directly leverage earlier hidden states, we propose a novel model named Attention-based Densely Connected Long Short-Term Memory (DenseLSTM). In DenseLSTM, to ensure maximum information flow, all previous cells are connected to the current cell, so that the update of the current state depends directly on all previous states. Furthermore, an attention mechanism is designed to model the impact of different hidden states. Because each cell is directly connected to all of its successor cells, each cell has direct access to the gradients from later ones. In this way, long-range dependencies are captured more effectively. We perform experiments on two public video captioning datasets, the Microsoft Video Description Corpus (MSVD) and MSR-VTT, and the experimental results demonstrate the effectiveness of DenseLSTM.
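The dense connectivity described in the abstract can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the abstract does not give the exact equations, so the additive attention form, the way the attended context is fed into the gates, and every name below (`DenseLSTMSketch`, `attend`, `step`, `run`, `W_a`, `U_a`, `v_a`) are assumptions made for illustration only. The sketch only shows the general idea that the current state is updated from an attention-weighted combination of all previous hidden states rather than from the last one alone.

```python
import numpy as np


def softmax(x):
    # Numerically stable softmax over a 1-D score vector.
    e = np.exp(x - np.max(x))
    return e / e.sum()


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class DenseLSTMSketch:
    """Illustrative only: an LSTM-style cell whose recurrent input is an
    attention-weighted sum of ALL previous hidden states (assumed form)."""

    def __init__(self, input_size, hidden_size, seed=0):
        rng = np.random.default_rng(seed)
        # Gate weights act on [x_t ; attended context] (hypothetical layout).
        self.W = rng.normal(0.0, 0.1, (4 * hidden_size, input_size + hidden_size))
        self.b = np.zeros(4 * hidden_size)
        # Additive attention parameters (hypothetical).
        self.W_a = rng.normal(0.0, 0.1, (hidden_size, hidden_size))
        self.U_a = rng.normal(0.0, 0.1, (hidden_size, input_size))
        self.v_a = rng.normal(0.0, 0.1, hidden_size)

    def attend(self, x_t, history):
        # history: list of all previous hidden states, each of shape (hidden,).
        H = np.stack(history)                                   # (t, hidden)
        scores = np.tanh(H @ self.W_a.T + self.U_a @ x_t) @ self.v_a  # (t,)
        weights = softmax(scores)
        return weights @ H, weights                             # context: (hidden,)

    def step(self, x_t, history, c_prev):
        # The attended context replaces the single h_{t-1} of a standard LSTM.
        context, weights = self.attend(x_t, history)
        z = self.W @ np.concatenate([x_t, context]) + self.b
        i, f, o, g = np.split(z, 4)
        c_t = sigmoid(f) * c_prev + sigmoid(i) * np.tanh(g)
        h_t = sigmoid(o) * np.tanh(c_t)
        return h_t, c_t, weights


def run(inputs, hidden_size, seed=0):
    # inputs: array of shape (T, input_size).
    cell = DenseLSTMSketch(inputs.shape[1], hidden_size, seed)
    history = [np.zeros(hidden_size)]   # initial hidden state h_0
    c = np.zeros(hidden_size)
    all_weights = []
    for x_t in inputs:
        h, c, w = cell.step(x_t, history, c)
        history.append(h)               # every later step can attend to h
        all_weights.append(w)
    return np.stack(history[1:]), all_weights
```

In this sketch, the attention weights at step t have one entry per earlier hidden state, so the number of direct connections grows with the sequence position. That growth is the property the abstract refers to when it says each cell is connected to all of its successors.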

Yongqing Zhu, Shuqiang Jiang. Attention-based Densely Connected LSTM for Video Captioning. ACM Multimedia 2019, 21-25 October 2019, Nice, France.
