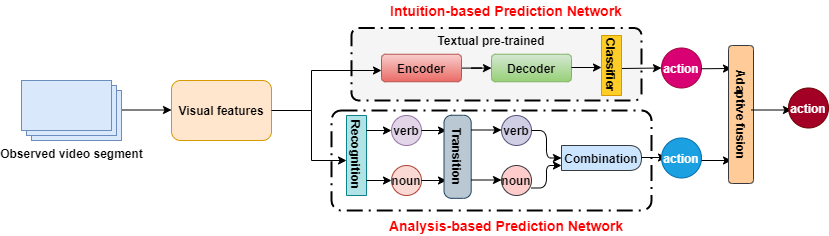

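Egocentric action anticipation requires a machine to predict, from a person's own viewpoint, the actions likely to happen next. It has broad application value in fields such as human-machine interaction and rehabilitation assistance. This paper proposes an egocentric action anticipation model that integrates intuition and analysis. It consists of three main parts: an intuition-based prediction network, an analysis-based prediction network, and an adaptive fusion network. We design the intuition-based prediction network as a black-box-like encoder-decoder structure. We design the analysis-based prediction network as three steps: recognition, transition, and combination. The adaptive fusion network uses an attention mechanism to integrate the results of the intuition and analysis parts into the final anticipation result.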
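The following is a minimal sketch of an analysis-style predictor organized as recognition, transition, and combination. It is not the authors' implementation and rests on several assumptions. Actions are integer class IDs. The transition step is a first-order transition matrix estimated from annotated action sequences. The combination step is a weighted average over per-segment predictions. All names (`estimate_transitions`, `transition_step`, `combine`, `smoothing`) are hypothetical.

```python
# Hypothetical sketch of an analysis-style predictor: recognition -> transition -> combination.
# Not the IAI authors' code; all names and design choices here are assumptions.


def estimate_transitions(sequences, num_classes, smoothing=1.0):
    """Estimate T[i][j] ~ P(next action = j | current action = i) from
    consecutive action pairs, with additive smoothing so every row is defined."""
    counts = [[smoothing] * num_classes for _ in range(num_classes)]
    for seq in sequences:
        for cur, nxt in zip(seq, seq[1:]):
            counts[cur][nxt] += 1
    # Normalize each row so it sums to 1 (row-stochastic matrix).
    return [[c / sum(row) for c in row] for row in counts]


def transition_step(p_current, T):
    """Propagate a recognized-action distribution one step forward:
    p_next[j] = sum_i p_current[i] * T[i][j]."""
    k = len(T)
    return [sum(p_current[i] * T[i][j] for i in range(k)) for j in range(k)]


def combine(predictions, weights):
    """Assumed combination step: weighted average of several next-action
    distributions (e.g. one per observed segment)."""
    total = sum(weights)
    k = len(predictions[0])
    return [sum(w * p[j] for w, p in zip(weights, predictions)) / total
            for j in range(k)]


if __name__ == "__main__":
    # Toy annotated sequences over 3 hypothetical action IDs.
    T = estimate_transitions([[0, 1, 2], [0, 1, 2], [0, 2]], num_classes=3)
    # Recognition step (stubbed): the observed segment is confidently action 0.
    p_next = transition_step([1.0, 0.0, 0.0], T)
    print([round(p, 3) for p in p_next])  # [0.167, 0.5, 0.333]
```

The transition step predicts the next action from what was recognized, not from how the future will look. This is one plausible way such a step could help when past and future frames differ visually.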

Abstract

In this paper, we focus on egocentric action anticipation from videos. This task enables various applications, such as helping intelligent wearable assistants understand users' needs and enhance their capabilities during interaction. It requires intelligent systems to observe from a first-person perspective and predict an action before it occurs. Most existing methods rely only on visual information. This is insufficient, especially when there is a salient visual difference between the past and the future. To alleviate this problem, which we call the visual gap, we propose a novel Intuition-Analysis Integrated (IAI) framework inspired by psychological research. It consists of three main parts: an Intuition-based Prediction Network (IPN), an Analysis-based Prediction Network (APN), and an Adaptive Fusion Network (AFN). To imitate the implicit, intuitive thinking process, we model IPN as an encoder-decoder structure and introduce a procedural instruction learning strategy implemented via textual pre-training. APN, in contrast, processes information under designed rules to imitate explicit analytical thinking, in three steps: recognition, transition, and combination. Both the procedural instruction learning strategy in IPN and the transition step in APN are crucial for improving anticipation performance because they mitigate the visual gap. Since intuition and analysis are complementary, AFN uses attention fusion to adaptively integrate the predictions from IPN and APN into the final anticipation results. We conduct extensive experiments on the largest egocentric video dataset. Qualitative and quantitative results validate the effectiveness of the IAI framework. They also demonstrate the advantage of bridging the visual gap with multi-modal information, including both the visual features of observed segments and the sequential instructions of actions.
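The adaptive fusion step can be illustrated with a generic attention-weighted late fusion. This is a minimal sketch, not the paper's AFN. It assumes that each branch (IPN and APN) outputs a probability distribution over action classes plus a feature vector. A query vector, learned in practice but fixed here, scores each branch via a dot product. A softmax then turns those scores into fusion weights. The names `attention_fuse`, `branch_feats`, and `query` are hypothetical.

```python
import math

# Hypothetical sketch of attention-based late fusion of two predictors' outputs.
# Not the IAI authors' code; the scoring function and all names are assumptions.


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    z = sum(exps)
    return [e / z for e in exps]


def attention_fuse(branch_probs, branch_feats, query):
    """Fuse per-branch class distributions with attention weights.

    branch_probs: one class-probability list per branch (e.g. IPN, APN).
    branch_feats: one feature vector per branch, used to score that branch.
    query:        a (normally learned) vector; score_b = query . feat_b.
    """
    scores = [sum(q * h for q, h in zip(query, feat)) for feat in branch_feats]
    alphas = softmax(scores)  # attention weights, sum to 1
    k = len(branch_probs[0])
    fused = [sum(a * p[j] for a, p in zip(alphas, branch_probs)) for j in range(k)]
    return fused, alphas


if __name__ == "__main__":
    ipn = [0.6, 0.3, 0.1]  # toy intuition-branch prediction
    apn = [0.2, 0.7, 0.1]  # toy analysis-branch prediction
    feats = [[1.0, 0.0], [0.0, 1.0]]
    fused, alphas = attention_fuse([ipn, apn], feats, query=[2.0, 0.0])
    print([round(a, 3) for a in alphas])  # [0.881, 0.119]
```

The weights come from a softmax, so the fused output remains a valid probability distribution whenever each branch's output is one. The weights can also shift per input toward whichever branch the scoring favors.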

Tianyu Zhang, Weiqing Min, Ying Zhu, Yong Rui, Shuqiang Jiang. 2020. An Egocentric Action Anticipation Framework via Fusing Intuition and Analysis. In Proceedings of the 28th ACM International Conference on Multimedia (MM ’20), October 12–16, 2020, Seattle, WA, USA. ACM, New York, NY, USA, 9 pages. https://doi.org/10.1145/3394171.3413964
