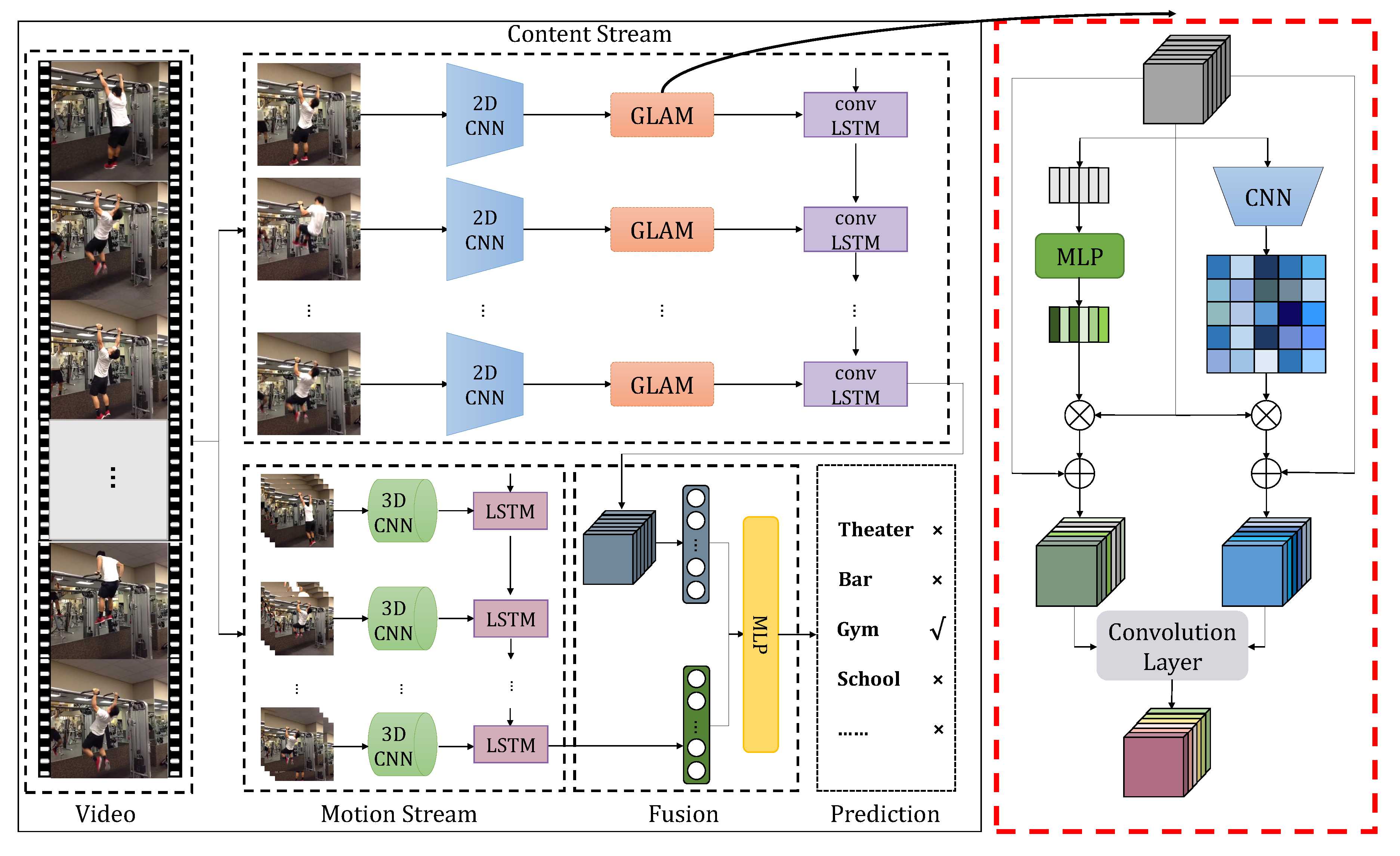

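With the growth of social media platforms such as Facebook and Vine, more and more users share their daily lives on these platforms, and the spread of mobile devices has led to massive amounts of multimedia data. Out of privacy concerns, users often share these moments without geographic location tags, which limits the development of location recognition and recommendation systems. With the continuous growth of multimedia data and the advances in artificial intelligence, especially deep learning, the task of video venue recognition has emerged. The task takes a video as input and determines the venue where it was recorded, with broad potential applications in personalized restaurant recommendation, user privacy detection, and other areas. Building on our group's earlier work (Video Venue Prediction: [Jiang2018-IEEE TMM]), this paper proposes a new network model, HA-TSFN. The model takes both global and local information into account and uses a global-local attention mechanism to capture scene and object information in videos, thereby enhancing the visual representation. Experimental analysis and validation are conducted on Vine, a large-scale video venue dataset.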

[Jiang2018-IEEE TMM] Shuqiang Jiang, Weiqing Min, Shuhuan Mei, “Hierarchy-dependent cross-platform multi-view feature learning for venue category prediction,” IEEE Transactions on Multimedia, vol. 21, no. 6, pp. 1609–1619, 2018.

Abstract

Video venue category prediction has been drawing increasing attention in the multimedia community because of applications such as personalized location recommendation and video verification. Most existing works rely on information from multiple modalities or from other platforms to strengthen video representations. However, noisy acoustic information, sparse textual descriptions and incompatible cross-platform data could limit the performance gain and reduce the universality of the model. Therefore, we focus on discriminative visual feature extraction from videos by introducing a hybrid-attention structure. Particularly, we propose a novel Global-Local Attention Module (GLAM), which can be inserted into neural networks to generate enhanced visual features from video content. In GLAM, the Global Attention (GA) is used to capture contextual scene-oriented information by assigning different weights to channels, while the Local Attention (LA) is employed to learn salient object-oriented features by allocating different weights to spatial regions. Moreover, GLAM can be extended to include multiple GAs and LAs for further visual enhancement. The two types of features, captured by the GAs and LAs respectively, are integrated via convolution layers and then delivered into a convolutional Long Short-Term Memory (convLSTM) network to generate spatial-temporal representations, constituting the content stream. In addition, video motions are explored to learn long-term movement variations, which also contributes to video venue prediction. The content and motion streams constitute our proposed Hybrid-Attention Enhanced Two-Stream Fusion Network (HA-TSFN), which finally merges the features from the two streams into comprehensive representations. Extensive experiments demonstrate that our method achieves state-of-the-art performance on the large-scale Vine dataset.
The visualization also shows that the proposed GLAM can capture complementary scene-oriented and object-oriented visual features from videos.
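The abstract describes GA as weighting channels and LA as weighting spatial regions. The following is a minimal, dependency-free Python sketch of that general idea, not the HA-TSFN implementation. Assumptions: the names `global_attention`, `local_attention` and `glam` are hypothetical; attention weights are computed by a parameter-free sigmoid of pooled activations instead of learned layers; and the two branches are simply concatenated along the channel axis, whereas the paper integrates them with convolution layers.

```python
import math
from typing import List

# A feature map is a list of C channels, each an H x W grid of floats.
FeatureMap = List[List[List[float]]]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def global_attention(fmap: FeatureMap) -> FeatureMap:
    """Channel attention sketch: one weight per channel (scene-oriented).

    Simplification (assumption): the weight is sigmoid(mean of the channel);
    a trained module would pass the pooled values through learned layers.
    """
    weighted = []
    for channel in fmap:
        cells = [v for row in channel for v in row]
        w = sigmoid(sum(cells) / len(cells))  # pool the channel into one weight
        weighted.append([[w * v for v in row] for row in channel])
    return weighted


def local_attention(fmap: FeatureMap) -> FeatureMap:
    """Spatial attention sketch: one weight per (i, j) location (object-oriented).

    Simplification (assumption): the weight is sigmoid(mean over channels
    at that location), shared by every channel.
    """
    num_c = len(fmap)
    height, width = len(fmap[0]), len(fmap[0][0])
    mask = [[sigmoid(sum(fmap[c][i][j] for c in range(num_c)) / num_c)
             for j in range(width)] for i in range(height)]
    return [[[mask[i][j] * channel[i][j] for j in range(width)]
             for i in range(height)] for channel in fmap]


def glam(fmap: FeatureMap) -> FeatureMap:
    """Hypothetical GLAM sketch: run GA and LA in parallel on the same input
    and concatenate their outputs along the channel axis."""
    return global_attention(fmap) + local_attention(fmap)


if __name__ == "__main__":
    # Toy input: 2 channels, each 2 x 2.
    toy = [[[1.0, 0.0], [0.0, 1.0]],
           [[-1.0, -1.0], [-1.0, -1.0]]]
    out = glam(toy)
    print(len(out))  # 4: two GA channels followed by two LA channels
```

In this toy case, GA down-weights the uniformly negative channel as a whole, while LA applies a lower weight at locations where the channels disagree less favorably, illustrating how the two branches emphasize different aspects (whole channels versus spatial positions) of the same feature map.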

Yanchao Zhang, Weiqing Min, Liqiang Nie, Shuqiang Jiang. “Hybrid-Attention Enhanced Two-Stream Fusion Network for Video Venue Prediction”, IEEE Transactions on Multimedia (TMM), 2020.
