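Compared with traditional object retrieval, instance-level image retrieval poses a series of challenges: large variation within the same class (e.g., illumination, rotation, occlusion, cropping), small differences between classes (e.g., a Coca-Cola bottle versus a Sprite bottle), images that contain a large amount of distracting information (such as background), and a large number of unlabeled distractor images. Recent progress shows that convolutional neural networks (CNNs) provide a better image feature representation than traditional methods. However, features that a CNN extracts from the whole image contain a large amount of distracting information, which can keep retrieval performance below expectations. To address this problem, and building on the group's earlier work, including the instance-level image retrieval dataset INSTRE [Wang2015-ACM TOMM], this work proposes a two-stage instance-level image retrieval framework. Experiments on INSTRE and several other instance-level image retrieval datasets demonstrate the effectiveness of the proposed framework.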

[Wang2015-ACM TOMM] Shuang Wang, Shuqiang Jiang. INSTRE: A New Benchmark for Instance-Level Object Retrieval and Recognition. TOMM 11(3): 37:1-37:21 (2015)

Abstract

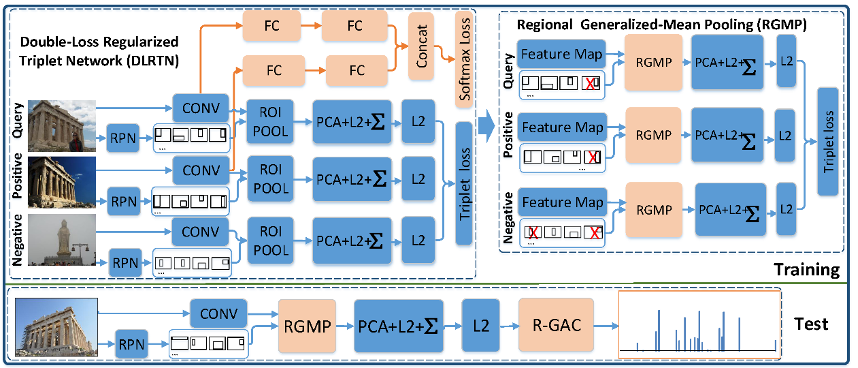

In this paper, we propose a novel framework for instance-level image retrieval. Recent methods focus on fine-tuning a Convolutional Neural Network (CNN) via a Siamese architecture to improve off-the-shelf CNN features. They generally train such networks with a ranking loss and do not make full use of supervised information for better network training, especially with more complex neural architectures. To solve this, we propose a two-stage triplet network training framework. First, we propose a Double-Loss Regularized Triplet Network (DLRTN), which extends the basic triplet network by attaching a classification sub-network and is trained by simultaneously optimizing two different types of loss functions. The two loss functions of DLRTN target the specific retrieval task and jointly boost the discriminative capability of DLRTN from different aspects via supervised learning. Second, taking the feature maps of the last convolutional layer of DLRTN and the regions detected by the region proposal network as input, we introduce a Regional Generalized-Mean Pooling (RGMP) layer for the triplet network and re-train the network to learn the pooling parameters. Through RGMP, we pool the feature maps for each region and aggregate the features of the different regions of each image into Regional Generalized Activations of Convolutions (R-GAC) as the final image representation. R-GAC generalizes the existing Regional Maximum Activations of Convolutions (R-MAC) and is thus more robust to scale and translation. We conduct experiments on six image retrieval datasets, including standard benchmarks and the recently introduced INSTRE dataset. Extensive experimental results demonstrate the effectiveness of the proposed framework.
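To make the pooling idea in the abstract concrete, the following is a minimal NumPy sketch of generalized-mean (GeM) pooling applied per region. It is an illustration under stated assumptions, not the paper's implementation: the function names (`gem_pool`, `regional_gem`), the fixed exponent `p`, the box format, and the sum-then-normalize aggregation (borrowed from the usual R-MAC convention) are all hypothetical choices. In the paper the pooling parameters are learned during re-training and the regions come from a region proposal network.

```python
import numpy as np

def gem_pool(feature_map, p=3.0, eps=1e-6):
    """Generalized-mean pooling over the spatial dims of a (C, H, W) map.

    p = 1 gives average pooling; as p grows the result approaches max pooling,
    which is the sense in which GeM generalizes max-activation descriptors.
    """
    # GeM assumes non-negative activations (e.g. post-ReLU); clip to avoid 0 ** (1/p) issues.
    x = np.clip(feature_map, eps, None)
    return np.mean(x ** p, axis=(1, 2)) ** (1.0 / p)

def l2_normalize(v, eps=1e-12):
    """Scale a vector to unit L2 norm."""
    return v / (np.linalg.norm(v) + eps)

def regional_gem(feature_map, regions, p=3.0):
    """Pool each region with GeM, then aggregate into one image descriptor.

    regions: list of (y0, y1, x0, x1) boxes in feature-map coordinates
             (hypothetical format; stands in for region-proposal output).
    Aggregation by sum followed by L2 normalization is an assumption here.
    """
    pooled = [
        l2_normalize(gem_pool(feature_map[:, y0:y1, x0:x1], p))
        for (y0, y1, x0, x1) in regions
    ]
    return l2_normalize(np.sum(pooled, axis=0))

# Example: a random 4-channel, 5x6 feature map with two illustrative regions.
rng = np.random.default_rng(0)
fmap = rng.random((4, 5, 6)) + 0.1
descriptor = regional_gem(fmap, regions=[(0, 3, 0, 4), (2, 5, 1, 6)], p=3.0)
```

With `p` fixed to a large value the per-region vectors behave like max-pooled activations, so an R-MAC-style descriptor can be seen as one setting of this more general pooling.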

Weiqing Min, Shuhuan Mei, Zhuo Li, Shuqiang Jiang. A Two-Stage Triplet Network Training Framework for Image Retrieval. IEEE Transactions on Multimedia (2020, Accepted)

Download: