In April 11, the 1stMandarin Audio-Visual Speech Recognition Challenge at ACM ICMI 2019 was launched. This challenge was started by researchers from 5 institutes, including the Chinese Academy of Sciences, Imperial College London, University of Oxford and IBM Silicon Vallery Lab. This challenge aims at exploring the complementarity between visual and acoustic information in real-world speech recognition systems, and will be held at ACM International Conference on Multimodal Interaction (ICMI) 2019. This challenge is available for any researchers and students, who are interested in pattern recognition, computer vision, image processing, speech recognition and so on.

There are 3 tasks in total. They are close-set word-level audio / visual / audio-visual speech recognition, open-set word-level visual/audio-visual speech recognition and visual based keyword spotting. And thanks to the support by SeetaTech, there would be corresponding bonus to the team in the first or second or third place. Besides the challenge tasks, we also call for papers on the following topics, including but not limited to lip reading, active voice detection, audio/visual based keyword spotting, taking face generation, visual information aided speech enhancement, audio-visual separation, sound localization, audio-visual based self-supervised learning, and so on.

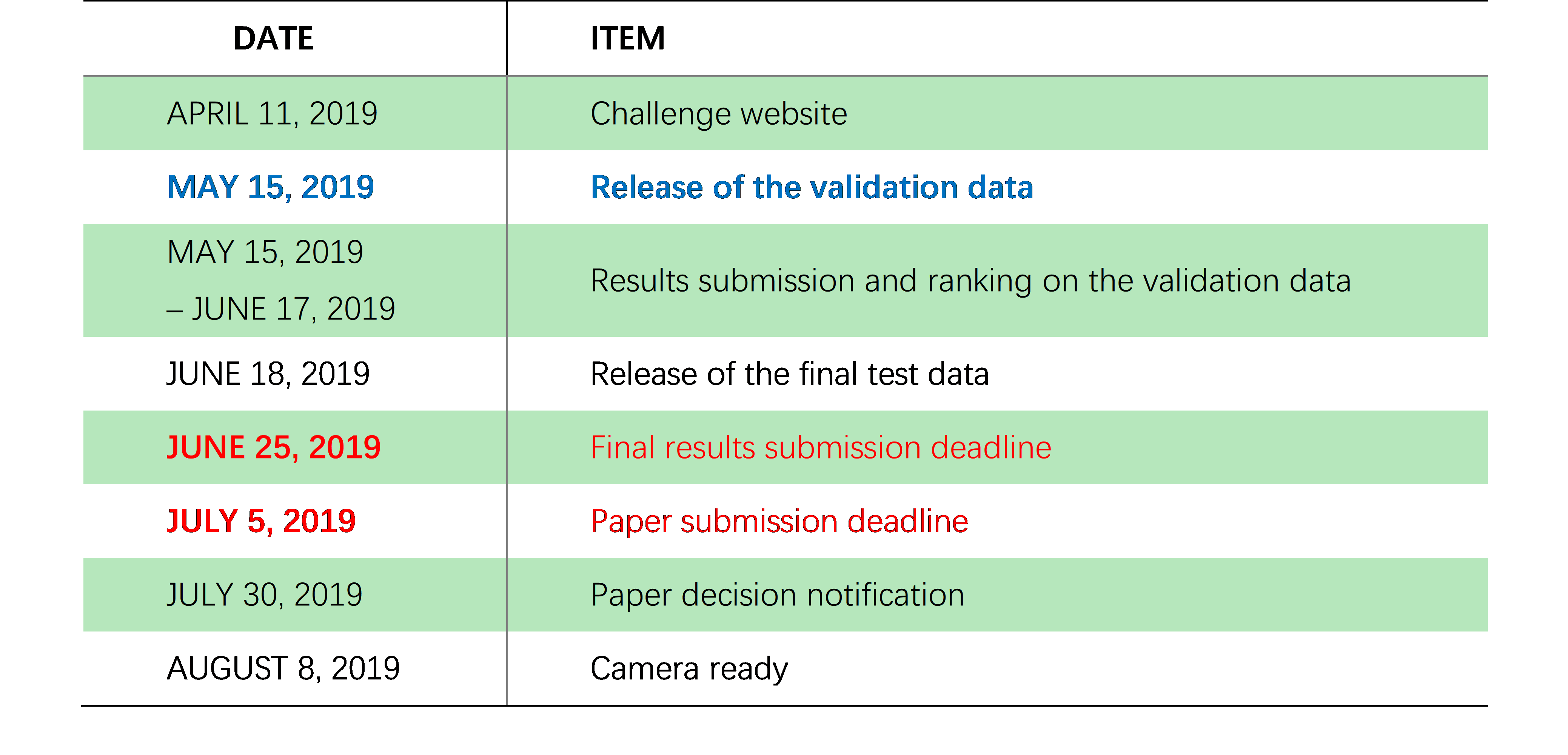

The corresponding deadlines are:

For more details,please go to http://vipl.ict.ac.cn/homepage/mavsr/index.html,or scan the QR code below to visit the website. If you have any questions, please feel free to contact lipreading@vipl.ict.ac.cn 。

Download: