Congratulations! VIPL’s paper on embodied ObjectGoal navigation, "HOZ++: Versatile Hierarchical Object-to-Zone Graph for Object Navigation" (authors: Sixian Zhang, Xinhang Song, Xinyao Yu, Yubing Bai, Xinlong Guo, Weijie Li, Shuqiang Jiang), has been accepted by IEEE Transactions on Pattern Analysis and Machine Intelligence (T-PAMI). T-PAMI is a tier-1 journal in the fields of pattern recognition, computer vision, and machine learning, with an impact factor of 20.8 in 2024.

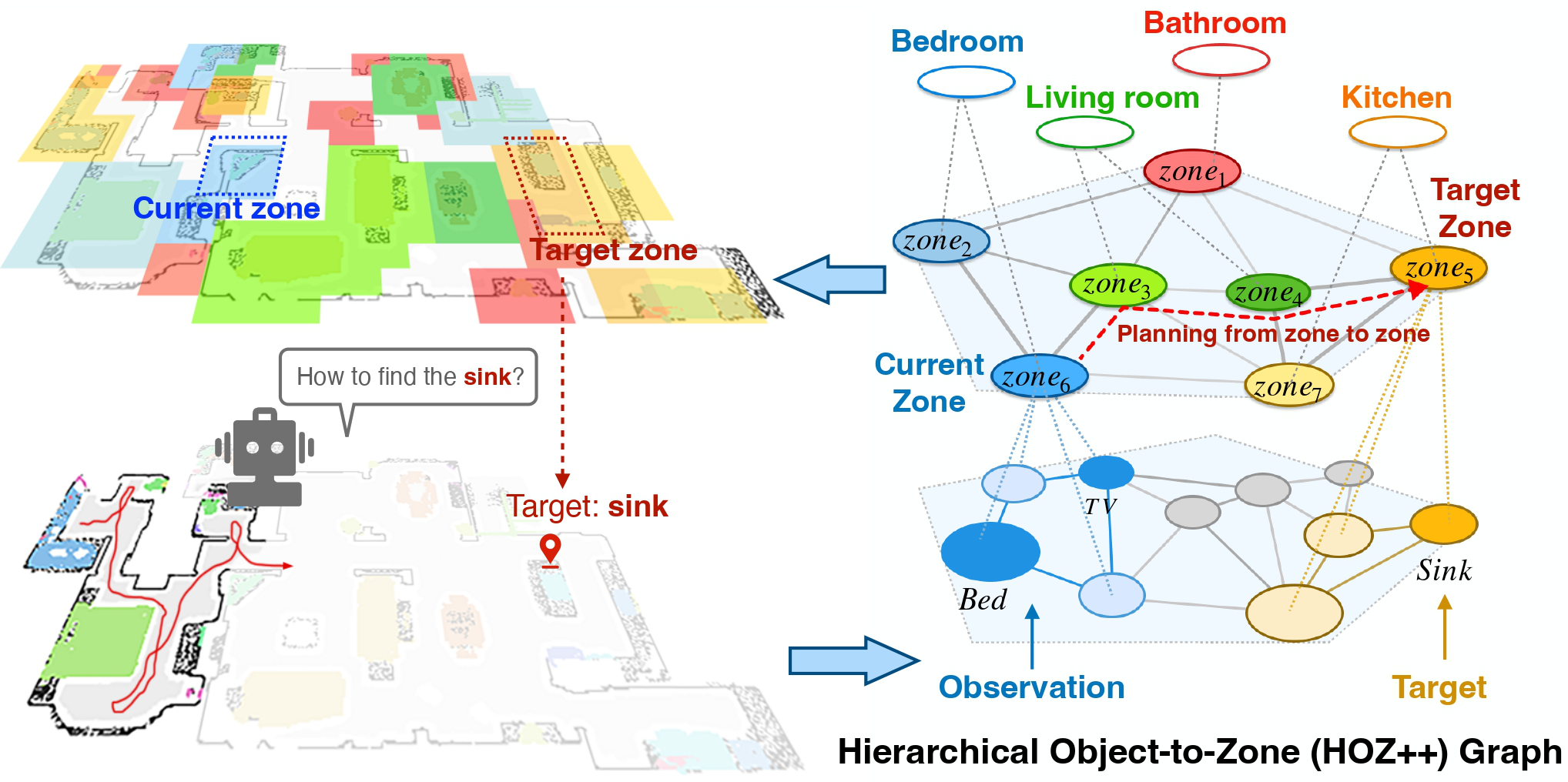

The goal of the object navigation task is to reach a target object in an unseen environment using visual information. Previous works typically implement deep models as agents that are trained to predict actions from visual observations. Despite extensive training, these agents often make poor decisions when navigating unseen environments toward targets that are not yet in view. In contrast, humans navigate toward targets remarkably well even in unseen environments. This capability is attributed to the cognitive map in the hippocampus, which enables humans to recall past experiences in similar situations and to anticipate what they will encounter during navigation. The cognitive map is also dynamically updated with new observations from unseen environments, and it equips humans with a wealth of prior knowledge that significantly enhances their navigation. Inspired by this mechanism, we propose the Hierarchical Object-to-Zone (HOZ++) graph, which encapsulates the regularities among objects, zones, and scenes. The HOZ++ graph helps the agent identify the current zone and the target zone, computes an optimal path between them, and selects the next zone along that path as guidance for the agent. Moreover, the HOZ++ graph is continuously updated from real-time observations, which improves its adaptability to new environments. The HOZ++ graph is versatile and can be integrated into existing methods, including end-to-end RL methods and modular methods. Our method is evaluated across four simulators: AI2-THOR, RoboTHOR, Gibson, and Matterport3D. Additionally, we build a realistic environment to evaluate our method in the real world. Experimental results demonstrate the effectiveness and efficiency of the proposed method.
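
The zone-level guidance described above (locate the current zone, locate the target zone, plan a path, and take the next zone on it) can be illustrated with a minimal sketch. This is not the paper's implementation: the toy graph, the zone names, the edge costs, and the helper names `shortest_zone_path` and `next_zone` are all hypothetical, and plain Dijkstra search is used here as a stand-in for whatever path computation HOZ++ actually performs.

```python
import heapq

# Hypothetical toy zone graph: node = zone, edge weight = assumed traversal cost.
zone_graph = {
    "sofa_zone": {"table_zone": 1.0, "sink_zone": 4.0},
    "table_zone": {"sofa_zone": 1.0, "sink_zone": 1.5},
    "sink_zone": {"table_zone": 1.5, "sofa_zone": 4.0},
}


def shortest_zone_path(graph, start, goal):
    """Dijkstra over a dict-of-dicts graph; returns the zone sequence, or [] if unreachable."""
    dist = {start: 0.0}
    prev = {}
    heap = [(0.0, start)]
    while heap:
        d, zone = heapq.heappop(heap)
        if zone == goal:
            break
        if d > dist.get(zone, float("inf")):
            continue  # stale heap entry
        for neighbor, cost in graph.get(zone, {}).items():
            nd = d + cost
            if nd < dist.get(neighbor, float("inf")):
                dist[neighbor] = nd
                prev[neighbor] = zone
                heapq.heappush(heap, (nd, neighbor))
    if goal not in dist:
        return []
    # Walk predecessors back from the goal to recover the path.
    path = [goal]
    while path[-1] != start:
        path.append(prev[path[-1]])
    return path[::-1]


def next_zone(graph, current, target):
    """Return the next zone to head for, or None if the target zone is unreachable."""
    path = shortest_zone_path(graph, current, target)
    if not path:
        return None
    # If the agent is already in the target zone, the guidance is that zone itself.
    return path[1] if len(path) > 1 else path[0]


if __name__ == "__main__":
    print(next_zone(zone_graph, "sofa_zone", "sink_zone"))
```

In this toy graph the direct sofa-to-sink edge costs more than the detour through the table zone, so the guidance for an agent in the sofa zone is the table zone. In the setting the abstract describes, the graph would also be updated from new observations before each planning step, which this sketch does not model.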

Download: