1. Overview

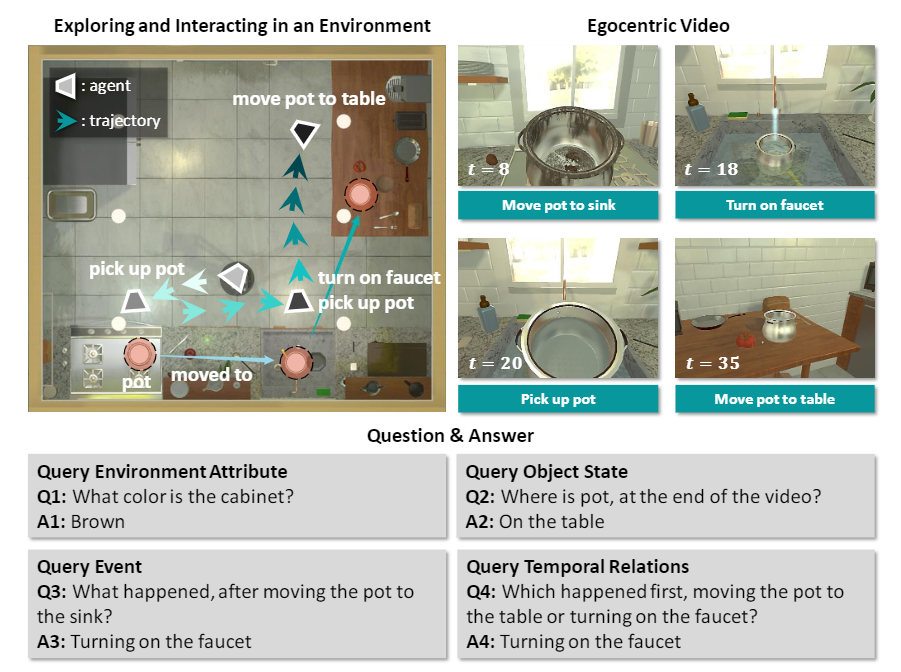

Visual understanding goes well beyond the study of images or videos on the web. Humans can accomplish complex tasks in changing situations because they deeply understand their environment, quickly perceive the events happening around them, and continuously track changes in objects' states; these abilities remain challenging for current AI systems. To equip AI systems with the ability to understand dynamic ENVironments, we build a video Question Answering dataset named Env-QA. Env-QA contains 23K egocentric videos, each composed of a series of events in which an agent explores and interacts with the environment. It also provides 85K questions that evaluate the ability to understand the composition, layout, and state changes of the environment as presented by the events in the videos.

2. Data Annotation

Video: Env-QA uses the AI2-THOR simulator to collect 23,261 egocentric videos of an agent exploring and interacting with the environment. These videos involve 15 types of basic actions, 115 types of objects, and 120 simulated indoor environments in total.

Question: Env-QA provides 85,072 questions of 5 types, which evaluate dynamic environment understanding from different aspects: querying object attributes, object states, events, and the temporal order of events, and counting events or objects.
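Because the questions are grouped into five types, results are naturally reported per type. The following is a minimal Python sketch of per-type accuracy, assuming exact string match between prediction and answer. The type labels, the `QARecord` fields (`qtype`, `answer`, `prediction`), and the `per_type_accuracy` helper are hypothetical illustrations and do not reflect the official Env-QA file format or evaluation script.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import Enum


class QuestionType(Enum):
    # Hypothetical labels for the five question categories described above.
    ATTRIBUTE = "attribute"  # querying object attributes
    STATE = "state"          # querying object states
    EVENT = "event"          # querying events
    ORDER = "order"          # querying the temporal order of events
    COUNT = "count"          # counting events or objects


@dataclass
class QARecord:
    # Hypothetical record layout; not the official annotation schema.
    qtype: QuestionType
    answer: str
    prediction: str


def per_type_accuracy(records):
    """Return {QuestionType: accuracy} for each type present in records.

    Accuracy here is an assumed exact-string-match metric.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for r in records:
        total[r.qtype] += 1
        if r.prediction == r.answer:
            correct[r.qtype] += 1
    return {t: correct[t] / total[t] for t in total}


if __name__ == "__main__":
    # Toy records for illustration only; not real Env-QA data.
    demo = [
        QARecord(QuestionType.COUNT, "2", "2"),
        QARecord(QuestionType.COUNT, "3", "1"),
        QARecord(QuestionType.STATE, "open", "open"),
    ]
    for qtype, acc in per_type_accuracy(demo).items():
        print(qtype.value, acc)
```

With the toy records above, the counting questions score 0.5 and the state question scores 1.0; types with no records are simply absent from the result rather than reported as zero.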

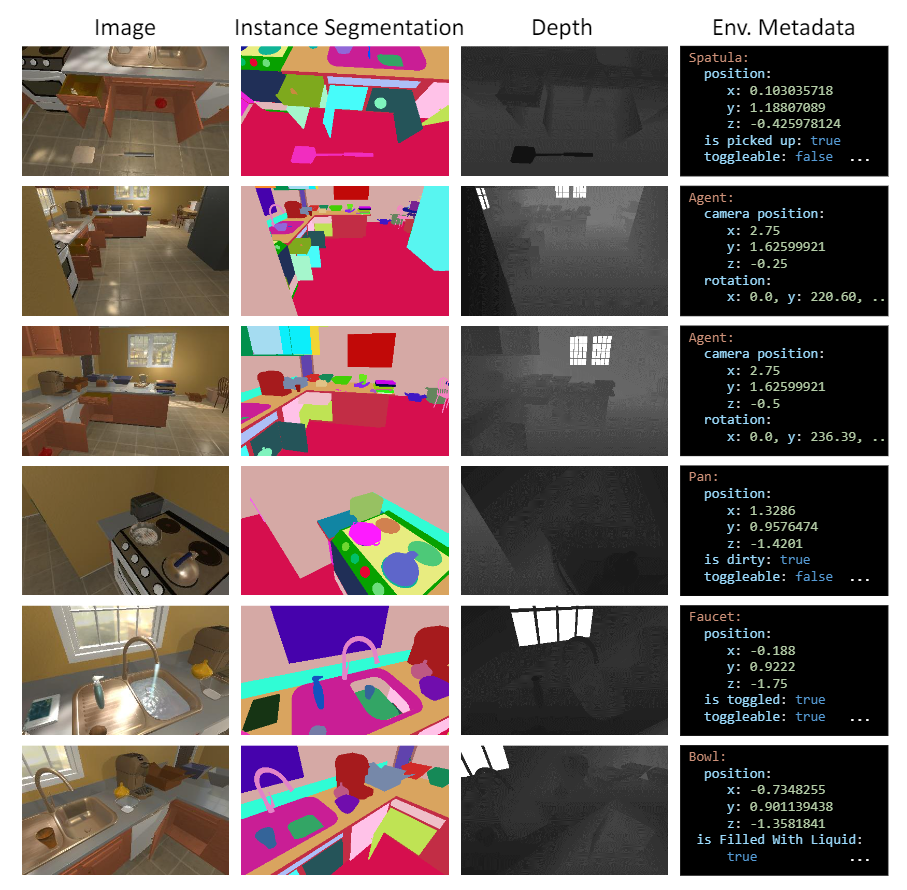

Additional Annotations: Using the AI2-THOR simulator, we also record the environment metadata of each video to help diagnose models, including depth maps, instance segmentation maps, and the poses and states of objects.

3. Contact

Ruiping Wang (wangruiping@ict.ac.cn), Institute of Computing Technology, Chinese Academy of Sciences

Difei Gao (difei.gao@vipl.ict.ac.cn), Institute of Computing Technology, Chinese Academy of Sciences

4. Download

The Env-QA dataset is released for research purposes only. To request a copy, please visit https://envqa.github.io/. If you use the Env-QA dataset, please cite the following paper:

Difei Gao, Ruiping Wang, Ziyi Bai, and Xilin Chen. "Env-QA: A Video Question Answering Benchmark for Comprehensive Understanding of Dynamic Environments." In Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 1675-1685. 2021.
