1. Overview

The VIPL-MumoFace-3K (RGB-D-NIR) dataset aims to aid research on developing, testing, and evaluating methods for cross-modality face synthesis and recognition. Due to IRB (Institutional Review Board) restrictions, we are not able to release the data of all subjects in VIPL-MumoFace-3K. Instead, we release a subset, VIPL-MumoFace-2K, which contains the data of the 2,000 subjects who signed an agreement allowing their images to be used for research purposes and shown in papers and reports. The released VIPL-MumoFace-2K dataset contains 163,559 RGB-NIR-D image pairs, which are aligned based on facial landmarks and cropped to 256×256 pixels. More details can be found in the "Readme" file provided in the data package.

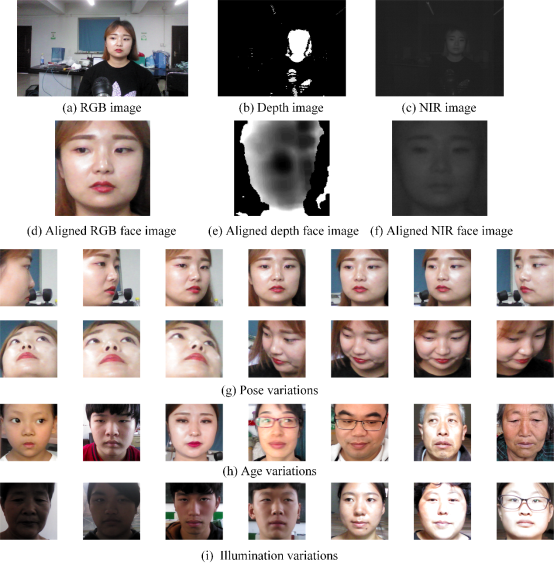

Figure 1. Example images from the VIPL-MumoFace-2K (RGB-D-NIR) dataset. (a-c) show the original RGB (visible), depth, and NIR images; (d-f) show the corresponding aligned 256×256 face images; (g-i) show the pose, age, and illumination variations in the dataset.

2. Contact

Hu Han (hanhu[at]ict.ac.cn), Institute of Computing Technology, Chinese Academy of Sciences

Shikang Yu (shikang.yu[at]vipl.ict.ac.cn), Institute of Computing Technology, Chinese Academy of Sciences

3. Download

The VIPL-MumoFace-2K dataset is released to universities and research institutes for research purposes only. We are NOT able to share the data with companies, because we did not obtain permission to do so from the participants in our data collection. To request a copy of the dataset, please proceed as follows:

Download the VIPL-MumoFace-2K Release Agreement, read it carefully, and complete it. Note that the agreement must be signed by a full-time staff member; students are not eligible to sign. Then scan the signed agreement and send it to Dr. Han (hanhu[at]ict.ac.cn) and Mr. Yu (shikang.yu[at]vipl.ict.ac.cn) from an official email address, i.e., a university or institute address (non-official addresses such as Gmail or 163 are not accepted). Once we receive your signed agreement, we will send you the download link.

4. Publication

Please cite the following paper if you use the VIPL-MumoFace-2K dataset in any form:

S. Yu, H. Han, S. Shan and X. Chen. CMOS-GAN: Semi-Supervised Generative Adversarial Model for Cross-Modality Face Image Synthesis. IEEE Transactions on Image Processing, vol. 32, pp. 144-158, 2023.
