Recently, a paper from VIPL on facial action unit detection was accepted by IEEE TPAMI. IEEE TPAMI (IEEE Transactions on Pattern Analysis and Machine Intelligence) is a top-tier artificial intelligence journal, ranked A by CCF, with an impact factor of 17.73. Below is a brief introduction to this work.

Yong Li, Jiabei Zeng, Shiguang Shan*. “Learning Representations for Facial Actions from Unlabeled Videos”, IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), 2020. (Accepted)

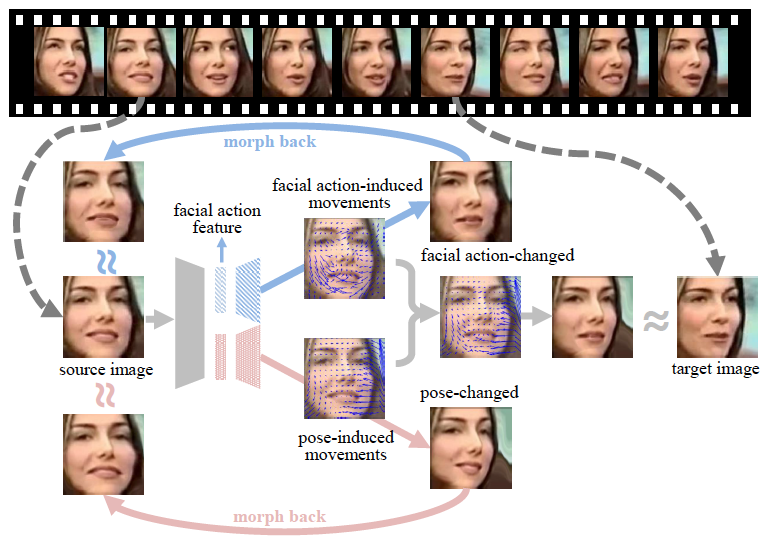

The Facial Action Coding System (FACS) is the most comprehensive anatomically based system for encoding facial expressions. FACS defines a set of about 30 atomic, non-overlapping facial muscle actions called Action Units (AUs); nearly any anatomically possible facial activity can be described as a combination of AUs. Automatic AU detection has drawn significant interest from computer scientists and psychologists over recent decades, as it holds promise for many applications, such as affect analysis, mental health assessment, and human-computer interaction. However, labelling AUs demands expertise and is therefore time-consuming and expensive.

To reduce the labelling demand, we propose a Twin-cycle Autoencoder (TAE) that leverages large numbers of unlabeled videos to learn discriminative representations for facial actions. TAE is inspired by the fact that facial actions are embedded in the pixel-wise displacements between two sequential face images in a video (hereinafter, the source and the target), so representations of facial actions can be learned by learning representations of these displacements. However, the displacements induced by facial actions are entangled with those induced by head motions. TAE is therefore trained to disentangle the two kinds of movement: it changes either the facial actions or the head pose of the source image, with the aim of reconstructing the target image, and evaluates the quality of the synthesized images. The framework of TAE is illustrated in Fig. 1. Experiments on AU detection show that the representations learned by TAE are discriminative for facial actions and that TAE performs comparably to existing AU detection methods. TAE's ability to decouple action-induced and pose-induced movements is also validated, qualitatively and quantitatively, by visualizing the generated images and analyzing facial image retrieval results.

Figure 1. Main idea of the proposed TAE
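
The disentangling idea described above can be illustrated with a toy sketch. This is not the TAE network and involves no learning: the two displacement fields are set by hand, images are tiny 4x4 grids, and every name here (`warp`, `l1_loss`, `action_flow`, `pose_flow`) is hypothetical and chosen only for illustration. The sketch assumes that the source-to-target displacement can be split into an action-induced part and a pose-induced part, and shows that applying only one part leaves a reconstruction error while applying both reproduces the target.

```python
from typing import List, Tuple

Image = List[List[float]]
Flow = List[List[Tuple[int, int]]]  # per-pixel (dy, dx) integer displacement


def warp(image: Image, flow: Flow) -> Image:
    """Backward warp: out[y][x] = image[y + dy][x + dx], clamped at the borders."""
    h, w = len(image), len(image[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            dy, dx = flow[y][x]
            sy = min(max(y + dy, 0), h - 1)
            sx = min(max(x + dx, 0), w - 1)
            row.append(image[sy][sx])
        out.append(row)
    return out


def l1_loss(a: Image, b: Image) -> float:
    """Mean absolute pixel difference between two images of equal size."""
    h, w = len(a), len(a[0])
    return sum(abs(a[y][x] - b[y][x]) for y in range(h) for x in range(w)) / (h * w)


def zero_flow(h: int, w: int) -> Flow:
    return [[(0, 0) for _ in range(w)] for _ in range(h)]


# Toy "source" frame: a single bright pixel at row 1, column 1.
source: Image = [
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
]

# Toy "target" frame: the bright pixel has moved to row 2, column 2.
target: Image = [
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
]

# Assumed action-induced displacement: a local move, one pixel down in column 1.
action_flow = zero_flow(4, 4)
action_flow[1][1] = (-1, 0)
action_flow[2][1] = (-1, 0)

# Assumed pose-induced displacement: a global shift of one pixel to the right.
pose_flow: Flow = [[(0, -1) for _ in range(4)] for _ in range(4)]

action_changed = warp(source, action_flow)   # only the "facial action" changes
pose_changed = warp(source, pose_flow)       # only the "head pose" changes
both_changed = warp(action_changed, pose_flow)

print("action only :", l1_loss(action_changed, target))  # 0.125
print("pose only   :", l1_loss(pose_changed, target))    # 0.125
print("action+pose :", l1_loss(both_changed, target))    # 0.0
```

In this toy setting the reconstruction error drops to zero only when both displacement fields are applied, which is the kind of signal a model could use to tell the two sources of movement apart. How TAE actually generates and combines its displacement fields is described in the paper itself.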
