Recently, two papers from VIPL were accepted by IEEE TIP (IEEE Transactions on Image Processing), an international journal on image processing and computer vision.

1. Motion Feature Aggregation for Video-based Person Re-identification (Xinqian Gu, Hong Chang, Bingpeng Ma and Shiguang Shan)

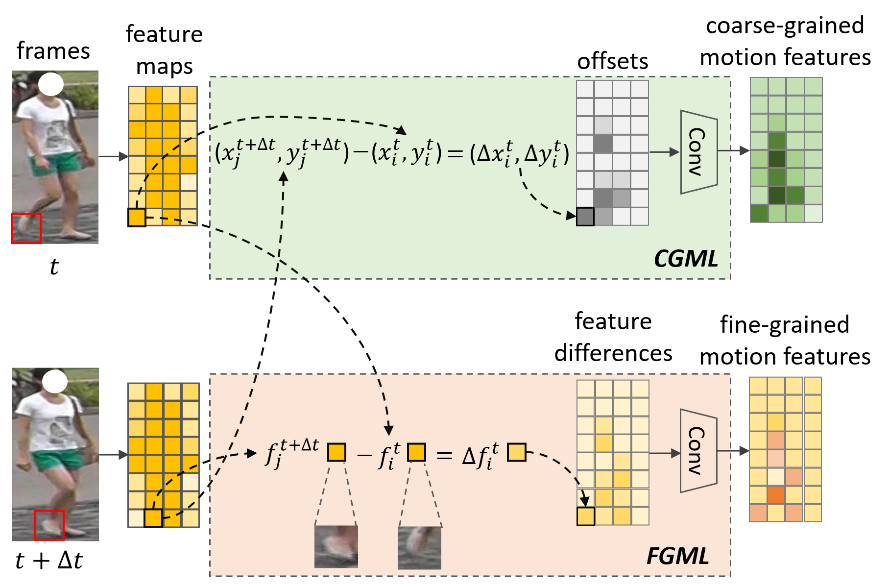

Most video-based person re-identification (re-id) methods focus only on appearance features and neglect motion features. In fact, motion features can help distinguish target persons who are hard to identify from appearance features alone. However, most existing temporal modeling methods cannot extract motion features effectively or efficiently for video-based re-id. In this paper, we propose a more efficient Motion Feature Aggregation (MFA) method that models and aggregates motion information at the feature-map level for video-based re-id. MFA consists of (i) a coarse-grained motion learning module, which extracts coarse-grained motion features from the position changes of body parts over time, and (ii) a fine-grained motion learning module, which extracts fine-grained motion features from the appearance changes of body parts over time. The two modules model motion information at different granularities and are complementary to each other. The proposed method can easily be combined with existing network architectures for end-to-end training. Extensive experiments on four widely used datasets demonstrate that the motion features extracted by MFA are crucial complements to appearance features for video-based re-id, especially in scenarios with large appearance changes. In addition, the results on LS-VID, currently the largest publicly available video-based re-id dataset, surpass the state-of-the-art methods by a large margin.
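To make the two granularities more concrete, here is a toy sketch in plain Python. It is not the authors' MFA implementation: using a soft-argmax centre as a body part's "position", taking frame-to-frame differences, averaging over time, and all function names (`coarse_motion`, `fine_motion`, `video_descriptor`) are our own assumptions, chosen only to contrast position changes with appearance changes of a body part over time.

```python
import math
from typing import List, Tuple

Grid = List[List[float]]  # hypothetical H x W attention map for one body part in one frame
Vec = List[float]


def soft_argmax(grid: Grid) -> Tuple[float, float]:
    """Softmax-weighted (row, col) centre of a 2D map, used here as the part's position."""
    peak = max(v for row in grid for v in row)
    w = [[math.exp(v - peak) for v in row] for row in grid]  # subtract max for stability
    total = sum(sum(row) for row in w)
    r = sum(i * x for i, row in enumerate(w) for x in row) / total
    c = sum(j * x for row in w for j, x in enumerate(row)) / total
    return r, c


def coarse_motion(part_maps: List[Grid]) -> List[Vec]:
    """Coarse-grained cue (assumed): displacement of the part centre between consecutive frames."""
    centres = [soft_argmax(g) for g in part_maps]
    return [[r1 - r0, c1 - c0] for (r0, c0), (r1, c1) in zip(centres, centres[1:])]


def fine_motion(part_feats: List[Vec]) -> List[Vec]:
    """Fine-grained cue (assumed): change of the part's feature vector between consecutive frames."""
    return [[b - a for a, b in zip(f0, f1)] for f0, f1 in zip(part_feats, part_feats[1:])]


def mean_over_time(seq: List[Vec]) -> Vec:
    """Average a sequence of equal-length vectors over the time axis."""
    return [sum(col) / len(seq) for col in zip(*seq)]


def video_descriptor(appearance: List[Vec], part_maps: List[Grid], part_feats: List[Vec]) -> Vec:
    """Concatenate temporally averaged appearance, coarse-motion and fine-motion features."""
    return (mean_over_time(appearance)
            + mean_over_time(coarse_motion(part_maps))
            + mean_over_time(fine_motion(part_feats)))


if __name__ == "__main__":
    # A part whose activation peak slides one column to the right in each frame.
    maps = [[[0.0] * 3 for _ in range(3)] for _ in range(3)]
    for t in range(3):
        maps[t][1][t] = 10.0
    print(coarse_motion(maps))  # close to [[0, 1], [0, 1]]
    print(fine_motion([[1.0, 2.0], [2.0, 2.0], [4.0, 1.0]]))  # [[1.0, 0.0], [2.0, -1.0]]
```

The coarse cue only sees where a part moves, while the fine cue only sees how the part's features change, which is one simple way to read the abstract's claim that the two are complementary.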

2. Interactive Regression and Classification for Dense Object Detector (Linmao Zhou, Hong Chang, Bingpeng Ma and Shiguang Shan)

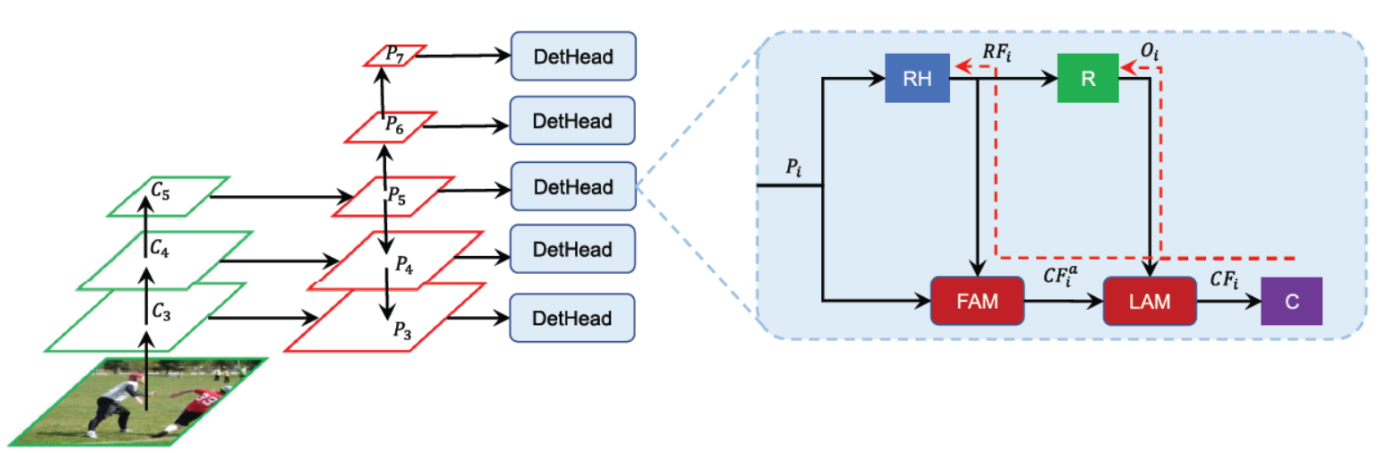

In object detection, enhancing feature representations with localization information has been shown to be crucial for improving detection performance. However, the localization information captured by the regression branch (i.e., the regression features and regression offsets) is still not well utilized. In this paper, we propose a simple but effective method called Interactive Regression and Classification (IRC) to better utilize localization information. Specifically, we propose a Feature Aggregation Module (FAM) and a Localization Attention Module (LAM) that pass localization information to the classification branch during forward propagation. Furthermore, the classifier guides the learning of the regression branch during backward propagation, ensuring that the localization information benefits both regression and classification. Thus, the regression and classification branches are learned interactively. Our method can be easily integrated into anchor-based and anchor-free object detectors without increasing computation cost. With our method, performance improves significantly on many popular dense object detectors, including RetinaNet, FCOS, ATSS, PAA, GFL, GFLV2, OTA, GA-RetinaNet, RepPoints, BorderDet and VFNet. With a ResNet-101 backbone, IRC achieves 47.2% AP on COCO test-dev, surpassing the previous state-of-the-art PAA (44.8% AP) and GFL (45.0% AP) without sacrificing efficiency in either training or inference. Moreover, our best model (Res2Net-101-DCN) achieves a single-model, single-scale AP of 51.4%.
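The forward/backward interaction described above can be illustrated with a toy, single-location sketch in plain Python. It does not reproduce the paper's FAM or LAM: the additive feature fusion, the sigmoid gate computed from the predicted (l, t, r, b) offsets, and all names and weights are hypothetical. What it does show is the key property: because the classification score depends on the regression branch's outputs, the gradient of any classification loss would also reach the regression features. Here that dependence is checked numerically with finite differences instead of an autodiff framework.

```python
import math
from typing import List

Vec = List[float]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def dot(a: Vec, b: Vec) -> float:
    return sum(x * y for x, y in zip(a, b))


def interactive_cls_score(cls_feat: Vec, reg_feat: Vec, reg_offset: Vec,
                          w_cls: Vec, w_att: Vec, b_att: float) -> float:
    """Classification score at one location that also depends on the regression branch."""
    # Hypothetical FAM-like step: add the regression features to the classification features.
    fused = [c + r for c, r in zip(cls_feat, reg_feat)]
    # Hypothetical LAM-like step: a scalar gate computed from the predicted offsets (l, t, r, b).
    gate = sigmoid(dot(w_att, reg_offset) + b_att)
    return sigmoid(gate * dot(w_cls, fused))


def grad_wrt_reg_feat(cls_feat: Vec, reg_feat: Vec, reg_offset: Vec,
                      w_cls: Vec, w_att: Vec, b_att: float, eps: float = 1e-6) -> Vec:
    """Central finite differences of the score with respect to each regression feature."""
    grads = []
    for i in range(len(reg_feat)):
        up, down = list(reg_feat), list(reg_feat)
        up[i] += eps
        down[i] -= eps
        s_up = interactive_cls_score(cls_feat, up, reg_offset, w_cls, w_att, b_att)
        s_down = interactive_cls_score(cls_feat, down, reg_offset, w_cls, w_att, b_att)
        grads.append((s_up - s_down) / (2 * eps))
    return grads


if __name__ == "__main__":
    # Illustrative values only: cls_feat, reg_feat, offsets, w_cls, w_att, b_att.
    args = ([0.5, -0.2], [0.1, 0.3], [2.0, 1.0, 2.0, 1.0], [1.0, 1.0], [0.1] * 4, 0.0)
    print(interactive_cls_score(*args))
    # Non-zero entries: a classification loss on this score would also update the regression features.
    print(grad_wrt_reg_feat(*args))
```

In a real detector the same coupling would come for free from backpropagation; the finite-difference helper exists only to make the dependence visible without a deep learning library.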
