Congratulations! Five papers from VIPL have been accepted by CVPR 2022! CVPR is a top international conference on computer vision, pattern recognition and artificial intelligence hosted by IEEE. The five papers are summarized as follows:

1. Salient-to-Broad Transition for Video Person Re-identification. (Shutao Bai, Bingpeng Ma, Hong Chang, Rui Huang and Xilin Chen)

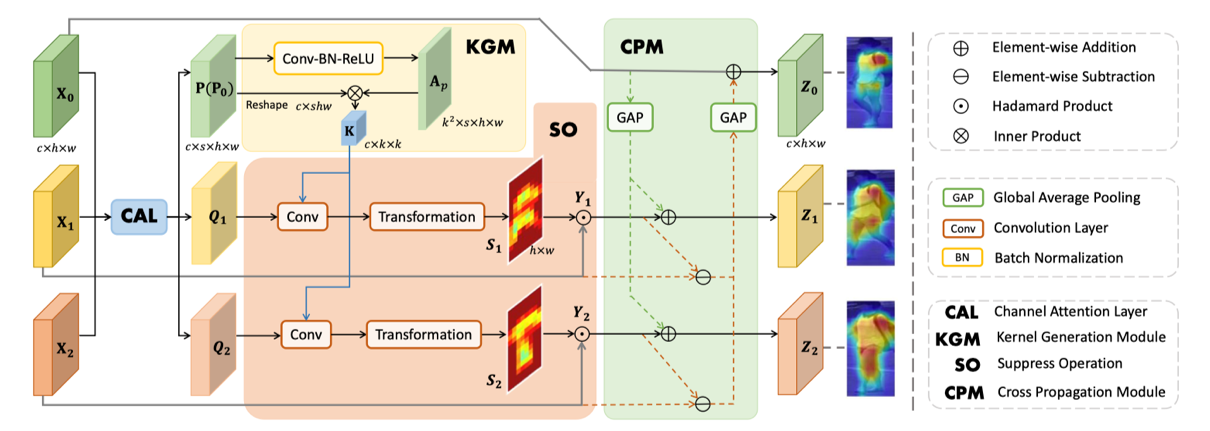

Due to the limited utilization of temporal relations in video re-id, the frame-level attention regions of mainstream methods are partial and highly similar. To address this problem, we propose a Salient-to-Broad Module (SBM) to enlarge the attention regions gradually. The enlarged regions cover the entire foreground of the pedestrian and thus provide a complete description of the target pedestrian. Specifically, in SBM, once the earlier frames have focused on the most salient regions, the later frames tend to focus on broader regions. In this way, additional information in the broad regions supplements the salient regions, yielding more powerful and discriminative video-level representations. To further improve SBM, an Integration-and-Distribution Module (IDM) is introduced to enhance frame-level representations. IDM first integrates features from the entire feature space and then distributes the integrated features to each spatial location. This integration-distribution operation can model long-range dependencies in the temporal-spatial dimension. SBM and IDM are mutually beneficial since they enhance the representations at the video level and the frame level, respectively. Extensive experiments on four prevalent benchmarks demonstrate the effectiveness and superiority of our method.

Figure 1. The overall architecture of the Salient-to-Broad Module.
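As a rough illustration of the salient-to-broad idea described above, the toy sketch below lets each frame attend only to positions that earlier frames have not yet covered. It is not the SBM architecture from the paper: the function name `salient_to_broad`, the `top_k` selection rule, and the hand-written saliency scores are all assumptions made for illustration.

```python
def salient_to_broad(frame_scores, top_k=2):
    """Toy salient-to-broad selection (hypothetical helper, not the paper's SBM).

    frame_scores: one list of per-position saliency scores for each frame.
    Each frame picks its top_k highest-scoring positions among those that
    earlier frames have not already attended to.
    """
    covered = set()
    attended_per_frame = []
    for scores in frame_scores:
        # Skip positions that earlier frames already cover.
        candidates = [i for i in range(len(scores)) if i not in covered]
        # Rank the remaining positions by saliency, highest first.
        candidates.sort(key=lambda i: scores[i], reverse=True)
        picked = sorted(candidates[:top_k])
        attended_per_frame.append(picked)
        covered.update(picked)
    return attended_per_frame, covered


# Three frames whose raw saliency is nearly identical over six positions.
frames = [
    [0.90, 0.80, 0.30, 0.20, 0.10, 0.05],
    [0.90, 0.70, 0.40, 0.20, 0.10, 0.05],
    [0.80, 0.90, 0.30, 0.25, 0.10, 0.05],
]
attended, covered = salient_to_broad(frames, top_k=2)
```

Without the `covered` set, every frame would select the same two positions; with it, the attended regions across frames jointly cover all six positions.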

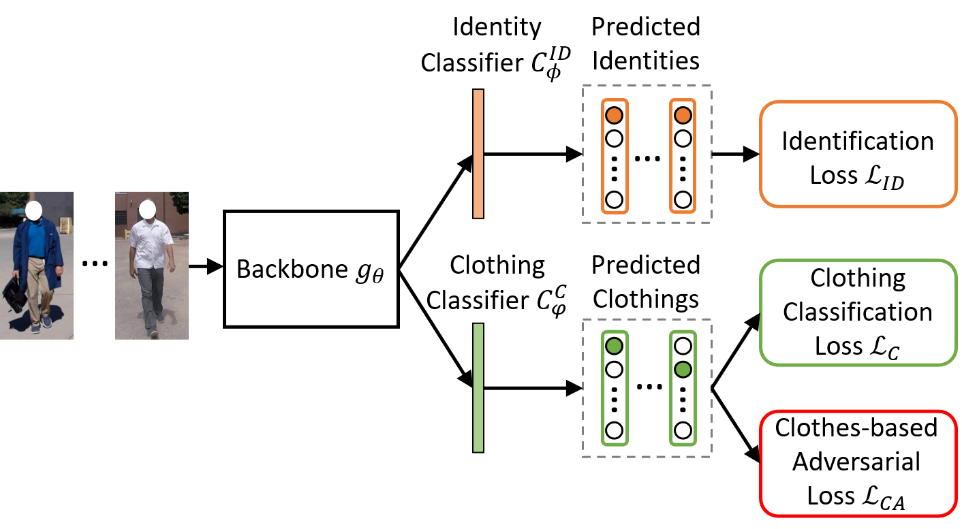

2. Clothes-Changing Person Re-identification with RGB Modality Only. (Xinqian Gu, Hong Chang, Bingpeng Ma, Shutao Bai, Shiguang Shan and Xilin Chen)

The key to addressing clothes-changing person re-identification (re-id) is to extract clothes-irrelevant features, e.g., face, hairstyle, body shape, and gait. Most current works mainly focus on modeling body shape from multi-modality information (e.g., silhouettes and sketches), but do not make full use of the clothes-irrelevant information in the original RGB images. In this paper, we propose a Clothes-based Adversarial Loss (CAL) to mine clothes-irrelevant features from the original RGB images by penalizing the predictive power of the re-id model w.r.t. clothes. Extensive experiments demonstrate that, using RGB images only, CAL outperforms all state-of-the-art methods on widely used clothes-changing person re-id benchmarks. Besides, compared with images, videos contain richer appearance and additional temporal information, which can be used to model proper spatiotemporal patterns to assist clothes-changing re-id. Since there is no publicly available clothes-changing video re-id dataset, we contribute a new dataset named CCVID and show that there is much room for improvement in modeling spatiotemporal information.
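One generic way to penalize a model's predictive power with respect to clothes is to push a clothes classifier's output toward the uniform distribution. The sketch below uses that idea as a simplified stand-in; it is not the exact CAL formulation from the paper, and the function names and the choice of a KL-to-uniform penalty are assumptions.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def clothes_confusion_penalty(clothes_logits):
    """KL divergence from the clothes prediction to the uniform distribution.

    Hypothetical stand-in for an adversarial clothes loss: the value is 0
    when the clothes classifier is maximally unsure and grows as the
    features make clothes easier to predict.
    """
    probs = softmax(clothes_logits)
    k = len(probs)
    # KL(p || uniform) = sum_i p_i * log(p_i * k)
    return sum(p * math.log(p * k) for p in probs if p > 0)


uninformative = clothes_confusion_penalty([0.0, 0.0, 0.0])
mild = clothes_confusion_penalty([1.0, 0.0, 0.0])
confident = clothes_confusion_penalty([5.0, 0.0, 0.0])
```

Minimizing such a penalty with respect to the backbone, while a separate clothes classifier is trained normally, discourages the features from encoding clothing.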

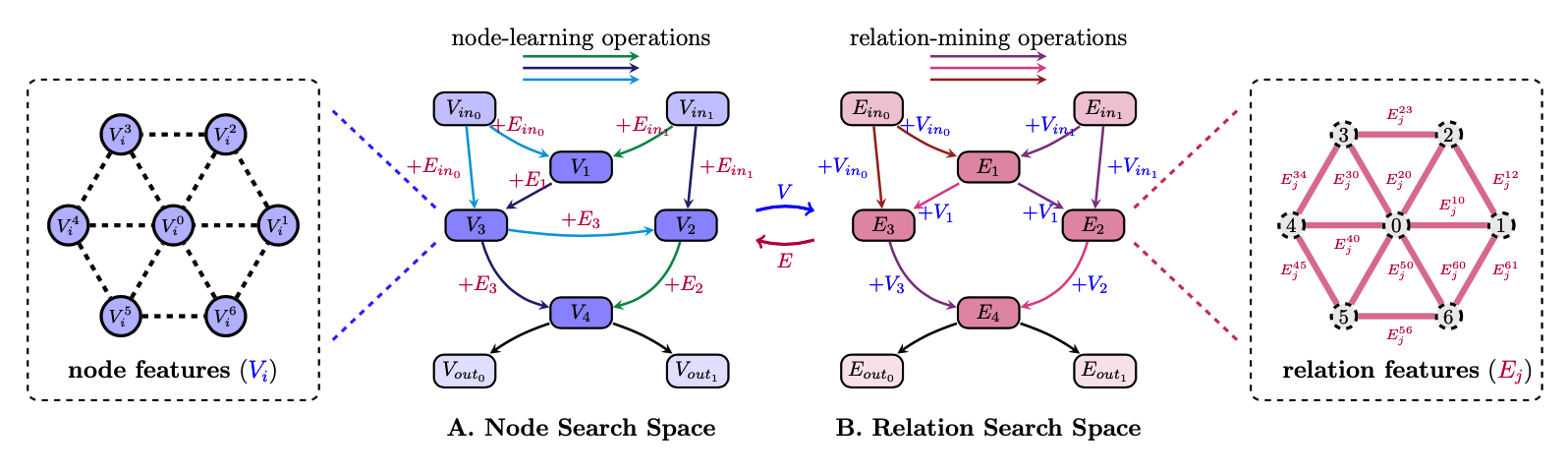

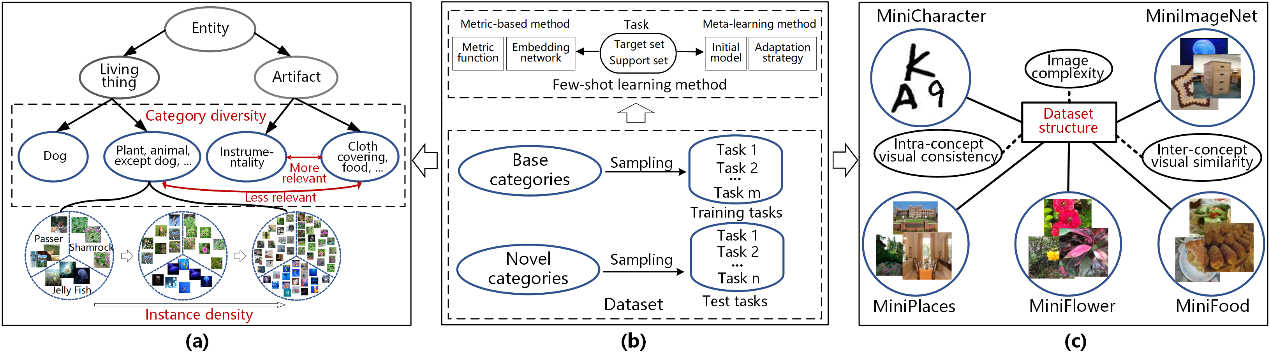

3. Automatic Relation-aware Graph Network Proliferation. (Shaofei Cai, Liang Li, Xinzhe Han, Jiebo Luo, Zhengjun Zha and Qingming Huang)

Graph neural architecture search has attracted much attention as Graph Neural Networks (GNNs) have shown powerful reasoning capability in many relational tasks. However, the currently used graph search space overemphasizes learning node features and neglects mining hierarchical relational information. Moreover, due to the diverse mechanisms in message passing, the graph search space is much larger than that of CNNs. This hinders the straightforward application of classical search strategies to the complicated graph search space. We propose Automatic Relation-aware Graph Network Proliferation (ARGNP) for efficiently searching GNNs with a relation-guided message passing mechanism. Specifically, we first devise a novel dual relation-aware graph search space that comprises both node and relation learning operations. These operations can extract hierarchical node/relational information and provide anisotropic guidance for message passing on a graph. Second, analogous to cell proliferation, we design a network proliferation search paradigm to progressively determine the GNN architectures by iteratively performing network division and differentiation. Experiments on six datasets for four graph learning tasks demonstrate that GNNs produced by our method are superior to the current state-of-the-art hand-crafted and search-based GNNs.

4. Few Shot Generative Model Adaption via Relaxed Spatial Structural Alignment. (Jiayu Xiao, Liang Li, Chaofei Wang, Zhengjun Zha and Qingming Huang)

Training a generative adversarial network (GAN) with limited data has been a challenging task. A feasible solution is to start with a GAN well-trained on a large-scale source domain and adapt it to the target domain with a few samples, termed few-shot generative model adaption. However, existing methods are prone to model overfitting and collapse in extremely few-shot settings (fewer than 10 samples). To solve this problem, we propose a relaxed spatial structural alignment method to calibrate the target generative models during the adaption. We design a cross-domain spatial structural consistency loss comprising the self-correlation and disturbance correlation consistency losses. It helps align the spatial structural information between the synthesized image pairs of the source and target domains. To relax the cross-domain alignment, we compress the original latent space of generative models to a subspace. Image pairs generated from the subspace are pulled closer. We further design a metric to evaluate the quality of synthesized images from the structural perspective, which can serve as a supplement to the current metrics. Qualitative and quantitative experiments show that our method consistently surpasses the state-of-the-art methods in the few-shot setting.
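To make the self-correlation consistency idea more concrete, the sketch below compares the pairwise cosine-similarity structure of two small feature maps. It is a minimal illustration only: the cosine similarity, the mean absolute difference, and all function names are assumptions, and the paper's actual loss may be defined differently.

```python
import math


def cosine(u, v):
    """Cosine similarity between two feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v + 1e-12)


def self_correlation(features):
    """Similarity of every spatial position to every other position.

    features: list of per-position feature vectors from one image.
    """
    return [[cosine(u, v) for v in features] for u in features]


def self_correlation_consistency(source_feats, target_feats):
    """Mean absolute difference between two self-correlation matrices
    (hypothetical choice of distance)."""
    cs = self_correlation(source_feats)
    ct = self_correlation(target_feats)
    n = len(cs)
    return sum(abs(cs[i][j] - ct[i][j])
               for i in range(n) for j in range(n)) / (n * n)


source = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
same_structure = [[3.0, 0.0], [0.0, 3.0], [3.0, 3.0]]   # rescaled features
collapsed = [[1.0, 0.0], [1.0, 0.0], [1.0, 0.0]]        # all positions alike

loss_same = self_correlation_consistency(source, same_structure)
loss_collapsed = self_correlation_consistency(source, collapsed)
```

Because the comparison is made on relations between positions rather than on raw feature values, a target image can change appearance freely while being penalized only when its spatial structure drifts from that of the source image.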

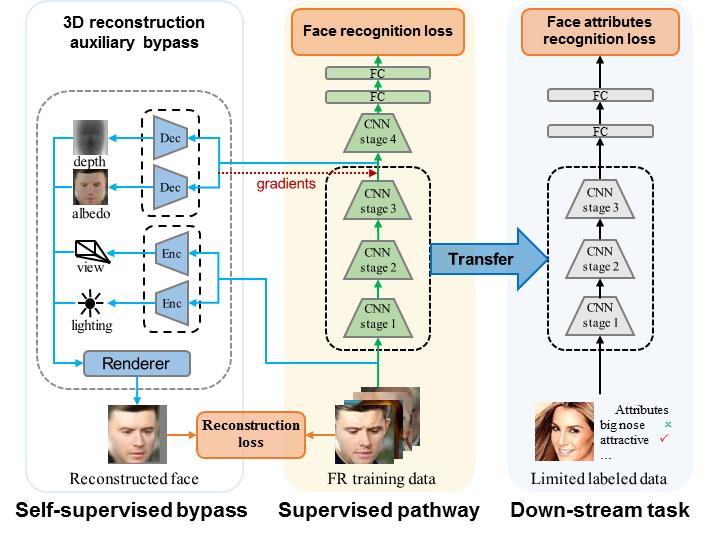

5. Enhancing Face Recognition with Self-Supervised 3D Reconstruction. (Mingjie He, Jie Zhang, Shiguang Shan and Xilin Chen)

Thanks to both the development of deep networks and abundant data, automatic face recognition (FR) has quickly reached human-level capacity in the past few years. However, the FR problem is not perfectly solved under uncontrolled illumination and pose. In this paper, we propose to enhance face recognition with a bypass of self-supervised 3D reconstruction, which forces the neural backbone to focus on the identity-related depth and albedo information while neglecting the identity-irrelevant pose and illumination information. Specifically, inspired by the physical model of image formation, we improve the backbone FR network by introducing a 3D face reconstruction loss with two auxiliary networks. The first one estimates the pose and illumination from the input face image, while the second one decodes the canonical depth and albedo from the intermediate feature of the FR backbone network. The whole network is trained in an end-to-end manner with both the classic face identification loss and the loss of 3D face reconstruction with the physical parameters. In this way, the self-supervised reconstruction acts as a regularization that enables the recognition network to understand faces in a 3D view, and the learnt features are forced to encode more information about canonical facial depth and albedo, which is more intrinsic and beneficial to face recognition. Extensive experimental results on various face recognition benchmarks show that, without any cost of extra annotations and computations, our method outperforms the state-of-the-art ones. Moreover, the learnt representations also generalize well to other face-related downstream tasks, such as facial attribute recognition with limited labeled data.
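The way a reconstruction term can regularize an identification loss is sketched below with a simple Lambertian shading model, in which a pixel is the albedo multiplied by ambient plus diffuse lighting. The Lambertian model, the lighting coefficients, the `recon_weight` value, and all function names are assumptions chosen for illustration, not details taken from the paper.

```python
def shade_pixel(albedo, normal, light_dir, ambient=0.3, diffuse=0.7):
    """Render one pixel with Lambertian shading (assumed image formation model).

    normal and light_dir are unit 3-vectors; surfaces facing away from the
    light receive only the ambient term.
    """
    cos_angle = max(0.0, sum(n * l for n, l in zip(normal, light_dir)))
    return albedo * (ambient + diffuse * cos_angle)


def reconstruction_loss(rendered, observed):
    """Mean absolute error between rendered and observed pixel values."""
    return sum(abs(r - o) for r, o in zip(rendered, observed)) / len(observed)


def total_loss(identification_loss, recon_loss, recon_weight=0.5):
    """Identification loss plus a weighted reconstruction regularizer
    (recon_weight is a hypothetical hyperparameter)."""
    return identification_loss + recon_weight * recon_loss


front_lit = shade_pixel(0.8, (0.0, 0.0, 1.0), (0.0, 0.0, 1.0))
back_lit = shade_pixel(0.8, (0.0, 0.0, 1.0), (0.0, 0.0, -1.0))

recon = reconstruction_loss([front_lit, back_lit], [0.8, 0.3])
loss = total_loss(identification_loss=1.2, recon_loss=recon)
```

In this toy setup the same albedo yields different pixel values under different lighting, which is why separating albedo and depth from pose and illumination can help the features stay tied to identity.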
