Congratulations! VIPL's paper on robust architecture design for segmentation models, “Towards Robust Semantic Segmentation against Patch-based Attack via Attention Refinement” (Authors: Zheng Yuan, Jie Zhang*, Yude Wang, Shiguang Shan, Xilin Chen), has been accepted by IJCV. The International Journal of Computer Vision (IJCV) is a CCF rank-A, top-tier journal in artificial intelligence, with an impact factor of 19.5 as announced in 2022.

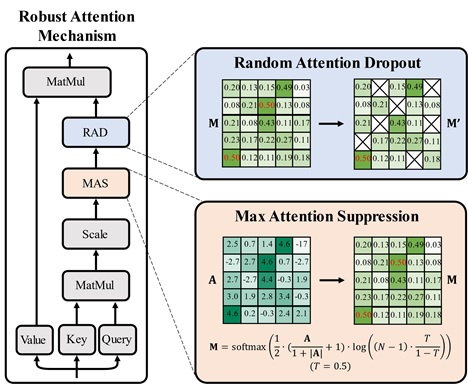

The attention mechanism has proven effective on various visual tasks in recent years. In semantic segmentation, it is used in many methods, including those built on both Convolutional Neural Network (CNN) and Vision Transformer (ViT) backbones. However, we observe that the attention mechanism is vulnerable to patch-based adversarial attacks. Through an analysis of the effective receptive field, we attribute this vulnerability to the fact that the wide receptive field brought by global attention may spread the influence of the adversarial patch. To address this issue, we propose a Robust Attention Mechanism (RAM) to improve the robustness of semantic segmentation models, which notably relieves their vulnerability to patch-based attacks. Compared to the vanilla attention mechanism, RAM introduces two novel modules called Max Attention Suppression and Random Attention Dropout, both of which aim to refine the attention matrix and limit the influence of a single adversarial patch on the semantic segmentation results at other positions. Extensive experiments demonstrate the effectiveness of RAM in improving the robustness of semantic segmentation models against various patch-based attack methods under different attack settings.

Fig. 1: The structure of our proposed Robust Attention Mechanism (RAM), which introduces two novel modules called Max Attention Suppression (MAS) and Random Attention Dropout (RAD).
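To make the idea of refining the attention matrix more concrete, here is a minimal NumPy sketch. It is not the paper's implementation: the announcement does not give the exact formulas, so the sketch assumes that suppression can be approximated by capping each attention weight at a ceiling, and that dropout can be approximated by zeroing randomly chosen attention entries. The function name `refined_attention` and the parameters `max_weight` and `drop_prob` are hypothetical.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def refined_attention(q, k, v, max_weight=0.2, drop_prob=0.1, rng=None):
    """Illustrative single-head attention with two assumed refinements.

    q, k, v: arrays of shape (n_positions, dim).
    max_weight: assumed ceiling on any single attention weight.
    drop_prob: assumed probability of zeroing an attention entry.
    Returns the attended output and the refined attention matrix.
    """
    dim = q.shape[-1]
    # Vanilla scaled dot-product attention: each row sums to 1.
    attn = softmax(q @ k.T / np.sqrt(dim), axis=-1)

    # Assumed stand-in for Max Attention Suppression: no single key
    # position may contribute more than max_weight to any query.
    attn = np.minimum(attn, max_weight)

    # Assumed stand-in for Random Attention Dropout: randomly cut
    # query-key links so a patch cannot reliably reach every position.
    if drop_prob > 0:
        if rng is None:
            rng = np.random.default_rng()
        keep = rng.random(attn.shape) >= drop_prob
        attn = attn * keep

    # Rows are deliberately not renormalized here, so the cap above
    # still bounds the weight of every key position in the output.
    return attn @ v, attn


rng = np.random.default_rng(0)
q, k, v = (rng.normal(size=(6, 4)) for _ in range(3))
out, attn = refined_attention(q, k, v, max_weight=0.2, drop_prob=0.5, rng=rng)
print(out.shape, float(attn.max()) <= 0.2)
```

In this sketch the rows of the refined matrix no longer sum to 1. That is a simplification chosen so that the per-position cap remains a hard bound; the published method may normalize or schedule these operations differently.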

Download: